Introduction to VT-x Page-Modification Logging

This article introduces Page-Modification Logging (PML), a VT-x feature.

1. Motivation

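Before PML, a VMM that wanted to track modifications to guest-physical addresses (GPAs) had to mark the EPT paging-structure entries as not-present or read-only. This triggers a large number of EPT violations, which is very expensive.

PML builds on the CPU's support for the accessed and dirty flags in EPT.

When PML is enabled, every write that sets a dirty flag in EPT also produces an in-memory log entry reporting the GPA of the write. When the log is full, a VM exit is triggered, and the VMM can then process the pages that were modified.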

2. Introduction

PML is a new feature on Intel’s Broadwell server platform that aims to reduce the overhead of the dirty logging mechanism.

The specification can be found in the white paper "Page Modification Logging for Virtual Machine Monitor".

Currently, dirty logging is done by write protection: guest memory is write-protected, and each dirty GFN is marked in dirty_bitmap when the subsequent write fault occurs. This works, but every dirty GFN costs an additional write fault to log. The overhead can be large if the guest writes intensively.

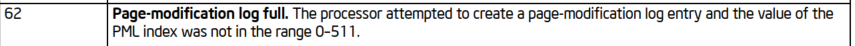
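The write-protection scheme described above can be sketched as a toy model. This is not KVM code: `wp_slot`, `wp_start_logging`, `wp_guest_write` and the 64-page slot size are hypothetical names and values chosen for illustration. The point is only that each page costs one fault the first time it is written after logging starts.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

#define NPAGES 64 /* hypothetical slot size, in 4K pages */

/* Toy model of one memory slot under write-protection dirty logging. */
struct wp_slot {
    bool          writable[NPAGES];          /* stand-in for the W bit of each EPT entry */
    uint64_t      dirty_bitmap[NPAGES / 64]; /* one bit per GFN */
    unsigned long faults;                    /* write faults taken so far */
};

/* Start dirty logging: write-protect every page and clear the bitmap. */
static void wp_start_logging(struct wp_slot *s)
{
    memset(s, 0, sizeof(*s));
}

/* Guest write to a GFN. A write-protected page faults once; the fault
 * handler marks the GFN dirty and restores write access, so later writes
 * to the same page proceed without a fault. */
static void wp_guest_write(struct wp_slot *s, unsigned gfn)
{
    assert(gfn < NPAGES);
    if (!s->writable[gfn]) {
        s->faults++;
        s->dirty_bitmap[gfn / 64] |= 1ULL << (gfn % 64);
        s->writable[gfn] = true;
    }
}
```

In this model the number of faults equals the number of distinct pages dirtied per logging round, which is the overhead PML is meant to remove.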

PML is a hardware-assisted, efficient way to do dirty logging. When the CPU changes an EPT entry’s D-bit from 0 to 1, it automatically logs the dirty GPA to a 4K PML buffer in memory. To support this, two fields were added to the VMCS: the base address of the 4K PML buffer and a PML index. The buffer holds 512 entries (8 bytes per GPA). The PML index is initially set to 511, the last entry, and the CPU decrements it after logging each GPA. Once all 512 entries have been used, the next logging attempt causes a PML-buffer-full VMEXIT.
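The logging behaviour can be modelled in a few lines of C. This is a software sketch of what the hardware does, not real VMCS access: `pml_vcpu`, `pml_init`, `pml_rearm`, `pml_guest_write` and the 1024-page guest are hypothetical. The sketch assumes the index starts at the last slot (entry 511 of 512) and counts down, so an index outside 0..511 means the buffer is full.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

#define PML_ENTRIES 512  /* 4K buffer / 8 bytes per GPA */
#define PAGE_SHIFT  12
#define NPAGES      1024 /* hypothetical guest size, in 4K pages */

struct pml_vcpu {
    uint64_t      buf[PML_ENTRIES]; /* models the 4K PML buffer */
    uint16_t      index;            /* models the PML index field */
    bool          d_bit[NPAGES];    /* models the D-bit of each EPT entry */
    unsigned long full_exits;       /* buffer-full exits taken */
};

static void pml_init(struct pml_vcpu *v)
{
    memset(v, 0, sizeof(*v));
    v->index = PML_ENTRIES - 1;
}

/* What the VMM does after draining the buffer: point at the last slot again. */
static void pml_rearm(struct pml_vcpu *v)
{
    v->index = PML_ENTRIES - 1;
}

/* Returns true if the write completes, false if a buffer-full exit is
 * taken first (the caller drains, re-arms and retries the write). */
static bool pml_guest_write(struct pml_vcpu *v, uint64_t gpa)
{
    uint64_t gfn = gpa >> PAGE_SHIFT;

    assert(gfn < NPAGES);
    if (v->d_bit[gfn])
        return true;                 /* D-bit already 1: nothing is logged */
    if (v->index >= PML_ENTRIES) {   /* index left the range 0..511: full */
        v->full_exits++;
        return false;
    }
    v->buf[v->index] = gpa & ~0xFFFULL; /* log the 4K-aligned GPA */
    v->index--;                         /* 0 - 1 wraps to 0xFFFF */
    v->d_bit[gfn] = true;
    return true;
}
```

With this layout the first logged GPA lands in entry 511 and the 512th in entry 0; repeated writes to an already-dirty page cost nothing.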

With PML, we no longer need write protection, so the flood of write-fault EPT violations is avoided, at the cost of one additional PML-buffer-full VMEXIT per 512 dirty GPAs. In theory, this reduces hypervisor overhead while the guest is in dirty logging mode and leaves more CPU cycles for the guest, so benchmarks in the guest are expected to perform better than without PML.

3. KVM design

3.1 Enable/Disable PML

PML is per-vcpu (per-VMCS), while the EPT table can be shared by vcpus, so PML must be enabled or disabled for all vcpus of a guest. A dedicated 4K page is allocated for each vcpu when PML is enabled for that vcpu.

Currently, we choose to always enable PML for the guest: PML is enabled when the VCPU is created and is never disabled during the guest’s lifetime. This avoids the complicated logic of enabling PML on demand while the guest is running. To eliminate unnecessary GPA logging when not in dirty logging mode, we set the D-bit manually for slots that have dirty logging disabled (KVM: MMU: Explicitly set D-bit for writable spte.).

3.2 Flush PML buffer

When userspace queries dirty_bitmap, there may be GPAs logged in a vcpu’s PML buffer that have not yet caused a VMEXIT because the buffer is not full. In this case, we need to flush the PML buffer manually for all vcpus and record the dirty GPAs in dirty_bitmap.

We flush the PML buffer at the beginning of each VMEXIT. This keeps dirty_bitmap more up to date, and it also simplifies flushing the PML buffer for all vcpus: we only need to kick all vcpus out of the guest, and the PML buffer of each vcpu is flushed automatically.
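A flush of this kind might look like the sketch below. It is a standalone model, not the KVM implementation: `pml_state`, `pml_flush` and the 1024-page bitmap are hypothetical, and the buffer and index are plain struct fields rather than VMCS fields. It assumes the index counts down from 511, so the valid entries are those above the current index.

```c
#include <assert.h>
#include <stdint.h>

#define PML_ENTRIES 512  /* 4K buffer / 8 bytes per GPA */
#define PAGE_SHIFT  12
#define NPAGES      1024 /* hypothetical guest size, in 4K pages */

struct pml_state {
    uint64_t buf[PML_ENTRIES]; /* models the per-vcpu PML buffer */
    uint16_t index;            /* models the PML index field */
};

/* Copy every logged GPA into dirty_bitmap (one bit per GFN), then reset
 * the index so the buffer is empty again. Returns the number of entries
 * flushed. */
static unsigned pml_flush(struct pml_state *p, uint64_t *dirty_bitmap)
{
    unsigned n = 0;
    unsigned first;

    if (p->index == PML_ENTRIES - 1)
        return 0; /* nothing logged since the last flush */

    /* The index names the next free slot, so valid entries start one
     * above it. An out-of-range index means the whole buffer is valid. */
    first = (p->index >= PML_ENTRIES) ? 0 : (unsigned)p->index + 1;

    for (unsigned i = first; i < PML_ENTRIES; i++) {
        uint64_t gfn = p->buf[i] >> PAGE_SHIFT;

        assert(gfn < NPAGES);
        dirty_bitmap[gfn / 64] |= 1ULL << (gfn % 64);
        n++;
    }

    p->index = PML_ENTRIES - 1;
    return n;
}
```

Calling such a function on every VMEXIT, regardless of the exit reason, means a query from userspace only has to force each vcpu to exit once.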


References: