Source Code Walkthrough: vhost ioeventfd and irqfd

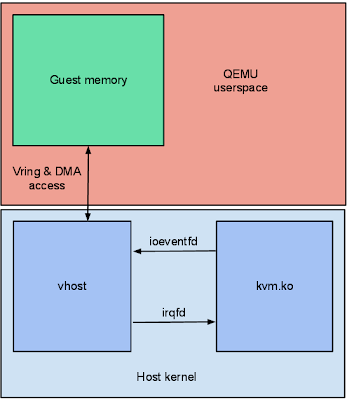

This article walks through the ioeventfd and irqfd paths in vhost, based on the QEMU and Linux source code.

prerequisite

- QEMU Internals: vhost architecture

- Dive into ioeventfd(KVM side) mechanism

- Dive into irqfd(KVM side) mechanism

overview

The ioeventfd is bound to the guest's kick, and the irqfd is bound to the guest's interrupt.

ioeventfd:

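- QEMU uses the KVM_IOEVENTFD ioctl to bind the ioeventfd to the address of the guest's kick register (a PIO/MMIO address) and to the vq index value, and hands it to KVM.

  - When KVM detects that the guest has written the vq index to the kick register, it writes the eventfd to notify vhost.

- QEMU uses the VHOST_SET_VRING_KICK ioctl to hand the ioeventfd to vhost, which then polls the ioeventfd for writes.

  - Once vhost sees a write on the ioeventfd, it starts pulling requests from the avail ring, processes the IO requests, updates the used ring, and finally injects an interrupt into the guest (with the help of the irqfd).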
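The matching rule described above can be modelled in user space. The sketch below is not KVM code: `struct kick_binding`, `guest_write` and `demo_kick_match` are hypothetical names, and the address `0x1000` and the 2-byte width are made-up values. It only illustrates the idea that a write signals the eventfd when address, length and value (the vq index) all match the registration.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <sys/eventfd.h>
#include <unistd.h>

/* Hypothetical userspace stand-in for one registered ioeventfd. */
struct kick_binding {
    uint64_t addr;      /* address of the kick register */
    uint32_t len;       /* width of the access in bytes */
    uint64_t datamatch; /* vq index that must be written */
    int fd;             /* eventfd shared with the consumer */
};

/* Model of the KVM-side check: signal the eventfd only on an exact match. */
static bool guest_write(const struct kick_binding *b, uint64_t addr,
                        uint32_t len, uint64_t val)
{
    uint64_t one = 1;

    if (addr != b->addr || len != b->len || val != b->datamatch)
        return false; /* not this vq's kick */
    return write(b->fd, &one, sizeof(one)) == (ssize_t)sizeof(one);
}

/* Returns the eventfd counter after a mismatching and a matching write. */
static uint64_t demo_kick_match(void)
{
    struct kick_binding b = { .addr = 0x1000, .len = 2, .datamatch = 1 };
    uint64_t count = 0;

    b.fd = eventfd(0, EFD_NONBLOCK);
    assert(b.fd >= 0);
    guest_write(&b, 0x1000, 2, 0); /* vq 0: ignored by this binding */
    guest_write(&b, 0x1000, 2, 1); /* vq 1: signals the eventfd */
    if (read(b.fd, &count, sizeof(count)) != (ssize_t)sizeof(count))
        count = 0;
    close(b.fd);
    return count;
}
```

Only the write carrying vq index 1 reaches the eventfd, so the consumer that polls this fd is woken for its own queue only.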

irqfd:

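- QEMU uses the VHOST_SET_VRING_CALL ioctl to hand the irqfd to vhost.

  - After updating the used ring, vhost writes the eventfd to ask KVM to inject an interrupt.

- QEMU uses the KVM_IRQFD ioctl to bind the irqfd to the vq's interrupt and hands it to KVM, which then polls the irqfd for writes.

  - Once KVM sees a write on the irqfd, it injects an interrupt into the guest according to the interrupt routing information.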
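The irqfd direction can be sketched the same way. This is a user-space model under stated assumptions: `struct irq_binding`, `signal_call` and `poll_irqfd` are hypothetical names, the GSI value 24 is arbitrary, and real KVM hooks into the eventfd's wait queue rather than calling `poll()` and `read()`.

```c
#include <assert.h>
#include <poll.h>
#include <stdint.h>
#include <sys/eventfd.h>
#include <unistd.h>

/* Hypothetical stand-in for one irqfd registration: eventfd -> interrupt. */
struct irq_binding {
    int fd;       /* eventfd shared with the producer */
    uint32_t gsi; /* interrupt line to raise for this vq */
};

/* Producer side (vhost's role): signal after the used ring is updated. */
static int signal_call(int fd)
{
    uint64_t one = 1;

    return write(fd, &one, sizeof(one)) == (ssize_t)sizeof(one) ? 0 : -1;
}

/* Consumer side (KVM's role): returns the gsi to inject, or -1 if idle. */
static int64_t poll_irqfd(const struct irq_binding *b)
{
    struct pollfd pfd = { .fd = b->fd, .events = POLLIN };
    uint64_t count;

    if (poll(&pfd, 1, 0) <= 0 || !(pfd.revents & POLLIN))
        return -1;
    if (read(b->fd, &count, sizeof(count)) != (ssize_t)sizeof(count))
        return -1;
    return b->gsi;
}

/* Returns 1 if the binding is idle before, and fires gsi 24 after, a signal. */
static int demo_irqfd(void)
{
    struct irq_binding b = { .fd = eventfd(0, EFD_NONBLOCK), .gsi = 24 };
    int ok;

    assert(b.fd >= 0);
    ok = poll_irqfd(&b) == -1;
    ok = ok && signal_call(b.fd) == 0;
    ok = ok && poll_irqfd(&b) == 24;
    ok = ok && poll_irqfd(&b) == -1; /* counter was reset by the read */
    close(b.fd);
    return ok;
}
```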

ioeventfd

Associating the ioeventfd on the QEMU side

virtio_bus_start_ioeventfd

vhost_virtqueue_start

struct VirtQueue

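As the code above shows, QEMU uses the ioeventfd of host_notifier to tie vhost and KVM together:

- vhost is responsible for polling the ioeventfd

- KVM is responsible for writing the ioeventfd to notify vhost of the guest's kick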
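To make the shared file descriptor concrete, the sketch below fills the two ioctl arguments from the Linux UAPI headers with the same eventfd. It is not QEMU's code: `make_kvm_kick` and `make_vhost_kick` are hypothetical helpers, and the PIO flag and 2-byte access width are assumptions that fit a legacy virtio-pci notify register. The ioctls themselves are not issued here, since they need an open KVM VM fd and a vhost device fd.

```c
#include <assert.h>
#include <linux/kvm.h>
#include <linux/vhost.h>
#include <stdint.h>
#include <string.h>

/* Fill the KVM_IOEVENTFD argument for a kick register (assumed to be PIO). */
static struct kvm_ioeventfd make_kvm_kick(int fd, uint64_t addr, uint16_t vq_index)
{
    struct kvm_ioeventfd io;

    assert(fd >= 0);
    memset(&io, 0, sizeof(io));
    io.addr = addr;          /* address of the kick register */
    io.len = 2;              /* assumed 16-bit notify register */
    io.datamatch = vq_index; /* only this value triggers the eventfd */
    io.fd = fd;
    io.flags = KVM_IOEVENTFD_FLAG_DATAMATCH | KVM_IOEVENTFD_FLAG_PIO;
    return io;               /* would be passed to ioctl(vm_fd, KVM_IOEVENTFD, &io) */
}

/* Fill the VHOST_SET_VRING_KICK argument for the same queue. */
static struct vhost_vring_file make_vhost_kick(int fd, unsigned int vq_index)
{
    struct vhost_vring_file file = { .index = vq_index, .fd = fd };

    return file; /* would be passed to ioctl(vhost_fd, VHOST_SET_VRING_KICK, &file) */
}
```

Because both structures carry the same fd, KVM becomes the writer and vhost the poller of a single eventfd, and QEMU stays out of the kick path.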

ioeventfd handling on the KVM side

See Dive into ioeventfd(KVM side) mechanism.

ioeventfd handling on the vhost side

long vhost_vring_ioctl(struct vhost_dev *d, unsigned int ioctl, void __user *argp)

QEMU uses the VHOST_SET_VRING_KICK ioctl to pass the ioeventfd to vhost, after which vhost starts polling the ioeventfd (vhost_poll_start).

/* Start polling a file. We add ourselves to file's wait queue. The caller must
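What "polling the ioeventfd" means for the consumer can be observed from user space. The sketch below uses `poll()` as a stand-in for vhost's wait-queue hook, which is an assumption made for illustration; `kick_pending` and `demo_poll_kick` are hypothetical names. It shows that the fd only becomes readable after a write, and that several kicks written before the consumer runs collapse into one wakeup.

```c
#include <assert.h>
#include <poll.h>
#include <stdint.h>
#include <sys/eventfd.h>
#include <unistd.h>

/* Non-blocking readiness check, standing in for vhost's wait-queue hook. */
static int kick_pending(int fd)
{
    struct pollfd pfd = { .fd = fd, .events = POLLIN };

    return poll(&pfd, 1, 0) == 1 && (pfd.revents & POLLIN);
}

/* Returns 1 if readiness tracks writes and kicks coalesce as expected. */
static int demo_poll_kick(void)
{
    int fd = eventfd(0, EFD_NONBLOCK);
    uint64_t one = 1, count = 0;
    int ok;

    assert(fd >= 0);
    ok = !kick_pending(fd);                       /* nothing written yet */
    ok = ok && write(fd, &one, sizeof(one)) == 8; /* first kick */
    ok = ok && write(fd, &one, sizeof(one)) == 8; /* second kick */
    ok = ok && kick_pending(fd);                  /* now readable */
    ok = ok && read(fd, &count, sizeof(count)) == 8;
    ok = ok && count == 2;                        /* both kicks, one wakeup */
    ok = ok && !kick_pending(fd);                 /* the read reset the counter */
    close(fd);
    return ok;
}
```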

Once vhost sees a write on the ioeventfd, the vhost_poll_wakeup callback is triggered.

vhost_poll_wakeup
└── vhost_poll_queue
    └── vhost_vq_work_queue
        └── vhost_worker_queue
            ├── llist_add(&work->node, &worker->work_list)
            └── vhost_task_wake(worker->vtsk)

What does worker->vtsk do next? Let us look at how worker->vtsk is initialized and which function it executes.

static struct vhost_worker *vhost_worker_create(struct vhost_dev *dev)
{
	struct vhost_worker *worker;
	struct vhost_task *vtsk;
	char name[TASK_COMM_LEN];
	int ret;
	u32 id;

	worker = kzalloc(sizeof(*worker), GFP_KERNEL_ACCOUNT);
	if (!worker)
		return NULL;

	snprintf(name, sizeof(name), "vhost-%d", current->pid);

	vtsk = vhost_task_create(vhost_worker, worker, name);
	if (!vtsk)
		goto free_worker;

	mutex_init(&worker->mutex);
	init_llist_head(&worker->work_list);
	worker->kcov_handle = kcov_common_handle();
	worker->vtsk = vtsk;
	...
}

static bool vhost_worker(void *data)
{
	struct vhost_worker *worker = data;
	struct vhost_work *work, *work_next;
	struct llist_node *node;

	node = llist_del_all(&worker->work_list);
	if (node) {
		__set_current_state(TASK_RUNNING);

		node = llist_reverse_order(node);
		/* make sure flag is seen after deletion */
		smp_wmb();
		llist_for_each_entry_safe(work, work_next, node, node) {
			clear_bit(VHOST_WORK_QUEUED, &work->flags);
			kcov_remote_start_common(worker->kcov_handle);
			work->fn(work); /* vq->handle_kick */
			kcov_remote_stop();
			cond_resched();
		}
	}

	return !!node;
}

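During initialization, vhost creates a kernel thread named vhost-$pid, where $pid is the pid of the QEMU process. This thread is called the "vhost worker thread".

The vhost worker thread runs vhost_worker, which in turn invokes the vq->handle_kick callback.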
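The queue-then-drain pattern used by vhost_worker_queue and vhost_worker can be reproduced in user space with C11 atomics. The sketch below is a simplified model, not kernel code: `struct work`, `struct worker`, `worker_queue` and `worker_run` are hypothetical names, an atomic pointer replaces the kernel's llist, and an `atomic_bool` replaces the VHOST_WORK_QUEUED bit.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stddef.h>

struct work {
    struct work *next;
    atomic_bool queued;            /* plays the role of VHOST_WORK_QUEUED */
    void (*fn)(struct work *work); /* handler, e.g. the kick handler */
    int id;
};

struct worker {
    _Atomic(struct work *) work_list; /* lock-free LIFO, like an llist */
};

static int run_order[8];
static int run_count;

static void record(struct work *w) { run_order[run_count++] = w->id; }

/* Producer: push the work once; a work that is already queued is skipped. */
static bool worker_queue(struct worker *wk, struct work *w)
{
    if (atomic_exchange(&w->queued, true))
        return false;
    w->next = atomic_load(&wk->work_list);
    while (!atomic_compare_exchange_weak(&wk->work_list, &w->next, w))
        ; /* w->next was refreshed with the current head, retry */
    return true;
}

/* Consumer: detach the whole list, restore FIFO order, run every handler. */
static bool worker_run(struct worker *wk)
{
    struct work *node = atomic_exchange(&wk->work_list, NULL);
    struct work *fifo = NULL, *next;

    for (; node; node = next) { /* reverse the LIFO list */
        next = node->next;
        node->next = fifo;
        fifo = node;
    }
    for (node = fifo; node; node = next) {
        next = node->next; /* the handler may requeue the work */
        atomic_store(&node->queued, false);
        node->fn(node);
    }
    return fifo != NULL;
}

/* Returns 1 if three works run in FIFO order and a duplicate is dropped. */
static int demo_worker(void)
{
    struct worker wk = { NULL };
    struct work w[3] = {
        { .fn = record, .id = 0 },
        { .fn = record, .id = 1 },
        { .fn = record, .id = 2 },
    };
    bool dup;

    run_count = 0;
    worker_queue(&wk, &w[0]);
    worker_queue(&wk, &w[1]);
    dup = worker_queue(&wk, &w[1]); /* already queued: dropped */
    worker_queue(&wk, &w[2]);
    worker_run(&wk);
    return !dup && run_count == 3 && run_order[0] == 0 &&
           run_order[1] == 1 && run_order[2] == 2 && !worker_run(&wk);
}
```

Clearing the queued flag before calling the handler mirrors the order in vhost_worker, so a kick that arrives while the handler is running can queue the work again instead of being lost.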

So how are vhost_poll, vq->handle_kick and the related fields initialized?

void vhost_dev_init(struct vhost_dev *dev,

When a vhost device is initialized, vhost_poll_init is called for each vq to set up vhost_poll, vq->handle_kick and the related fields.

/* Init poll structure */

/* The virtqueue structure describes a queue attached to a device. */
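The relationship between the poll structure, its embedded work and the vq's kick handler can be shown with a small user-space model. The types below (`struct poll_ctx`, `struct virtqueue`) and the helper `poll_ctx_init` are hypothetical simplifications, not the kernel's definitions; the point is only that the work is embedded in the vq, so the handler can recover its vq with `container_of`.

```c
#include <assert.h>
#include <stddef.h>

#define container_of(ptr, type, member) \
    ((type *)((char *)(ptr) - offsetof(type, member)))

struct work;
typedef void (*work_fn_t)(struct work *work);

struct work { work_fn_t fn; };

struct poll_ctx {
    struct work work;  /* queued to the worker when the eventfd fires */
    unsigned int mask; /* events of interest; a stand-in for EPOLLIN */
};

struct virtqueue {
    int index;
    int kicks_handled;
    struct poll_ctx poll;  /* embedded, so the handler can find its vq */
    work_fn_t handle_kick;
};

/* Bind a handler to the poll context, in the spirit of vhost_poll_init. */
static void poll_ctx_init(struct poll_ctx *poll, work_fn_t fn, unsigned int mask)
{
    assert(fn != NULL);
    poll->work.fn = fn;
    poll->mask = mask;
}

/* Device-specific kick handler: recover the vq from the embedded work. */
static void handle_kick(struct work *work)
{
    struct virtqueue *vq = container_of(work, struct virtqueue, poll.work);

    vq->kicks_handled++;
}

/* Returns how many kicks vq 1 handled after its work is run twice. */
static int demo_handle_kick(void)
{
    struct virtqueue vq = { .index = 1, .handle_kick = handle_kick };

    poll_ctx_init(&vq.poll, vq.handle_kick, 0x001 /* assumed POLLIN-like bit */);
    vq.poll.work.fn(&vq.poll.work); /* what the worker does: work->fn(work) */
    vq.poll.work.fn(&vq.poll.work);
    return vq.kicks_handled;
}
```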

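To summarize the vhost-side flow for polling the ioeventfd:

- vhost_poll_func makes vhost poll the ioeventfd by adding it to the file's wait queue

- KVM writes the ioeventfd to notify vhost

- vhost runs the vhost_poll_wakeup callback, which adds the work to the worker's work_list and wakes the vhost worker thread

- the vhost worker thread runs the vq->handle_kick callback, which processes the IO requests in the vq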

irqfd

Associating the irqfd on the QEMU side

virtio_pci_set_guest_notifiers

vhost_virtqueue_start

struct VirtQueue

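As the code above shows, QEMU uses the irqfd of guest_notifier to tie vhost and KVM together:

- KVM is responsible for polling the irqfd and then injecting an interrupt into the VM

- vhost writes the irqfd after updating the used ring, to ask KVM to inject the interrupt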
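The same shared-fd idea applies in the interrupt direction. The sketch below fills the KVM_IRQFD and VHOST_SET_VRING_CALL arguments from the Linux UAPI headers with one eventfd. `make_kvm_irqfd` and `make_vhost_call` are hypothetical helpers, the GSI is an arbitrary example value, and the ioctls are not issued because they need an open KVM VM fd and a vhost device fd.

```c
#include <assert.h>
#include <linux/kvm.h>
#include <linux/vhost.h>
#include <stdint.h>
#include <string.h>

/* Fill the KVM_IRQFD argument: a write to this eventfd raises the given GSI. */
static struct kvm_irqfd make_kvm_irqfd(int fd, uint32_t gsi)
{
    struct kvm_irqfd irqfd;

    assert(fd >= 0);
    memset(&irqfd, 0, sizeof(irqfd));
    irqfd.fd = (uint32_t)fd;
    irqfd.gsi = gsi;
    return irqfd; /* would be passed to ioctl(vm_fd, KVM_IRQFD, &irqfd) */
}

/* Fill the VHOST_SET_VRING_CALL argument: vhost signals this eventfd. */
static struct vhost_vring_file make_vhost_call(int fd, unsigned int vq_index)
{
    struct vhost_vring_file file = { .index = vq_index, .fd = fd };

    return file; /* would be passed to ioctl(vhost_fd, VHOST_SET_VRING_CALL, &file) */
}
```

Here the roles are reversed compared with the kick path: vhost is the writer and KVM is the poller of the eventfd.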

irqfd handling on the KVM side

See Dive into irqfd(KVM side) mechanism.

irqfd handling on the vhost side

long vhost_vring_ioctl(struct vhost_dev *d, unsigned int ioctl, void __user *argp)

QEMU calls VHOST_SET_VRING_CALL to pass the irqfd to vhost.

/* This actually signals the guest, using eventfd. */

After vhost has processed the IO requests and updated the used ring, it calls vhost_signal, which triggers a write to the irqfd; once KVM sees that write, it injects an interrupt into the VM.