Understanding the Linux Kernel Reading Notes: Kernel Synchronization

You could think of the kernel as a server that answers requests; these requests can come either from a process running on a CPU or an external device issuing an interrupt request. We make this analogy to underscore that parts of the kernel are not run serially, but in an interleaved way. Thus, they can give rise to race conditions, which must be controlled through proper synchronization techniques.

1 How the Kernel Services Requests

In the rest of this chapter, we will generally denote as “exceptions” both the system calls and the usual exceptions.

1.1 Kernel Preemption

In nonpreemptive kernels, the current process cannot be replaced unless it is about to switch to User Mode. Therefore, the main characteristic of a preemptive kernel is that a process running in Kernel Mode can be replaced by another process while in the middle of a kernel function.

The main motivation for making a kernel preemptive is to reduce the dispatch latency of the User Mode processes, that is, the delay between the time they become runnable and the time they actually begin running.

The kernel can be preempted only when it is executing an exception handler (in particular a system call) and the kernel preemption has not been explicitly disabled. The local CPU must have local interrupts enabled, otherwise kernel preemption is not performed.

Kernel preemption may happen either when a kernel control path (usually, an interrupt handler) is terminated, or when an exception handler reenables kernel preemption by means of preempt_enable().
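The conditions above can be condensed into a toy model. This is only a sketch: the structure and the `toy_` functions are hypothetical and merely mimic the nesting behavior of the real preempt_disable() and preempt_enable() macros.

```c
#include <stdbool.h>

/* Toy model of one CPU's state; every name here is hypothetical. */
struct toy_cpu {
    int  preempt_count;   /* > 0 means kernel preemption is disabled */
    bool irqs_enabled;    /* are local interrupts enabled? */
    bool need_resched;    /* is another process waiting to run? */
};

/* Calls may nest: each one adds a level that must be undone. */
static void toy_preempt_disable(struct toy_cpu *cpu)
{
    cpu->preempt_count++;
}

/* Returns true if re-enabling preemption would trigger a process switch now. */
static bool toy_preempt_enable(struct toy_cpu *cpu)
{
    cpu->preempt_count--;
    return cpu->preempt_count == 0 && cpu->irqs_enabled && cpu->need_resched;
}
```

With two nested toy_preempt_disable() calls, only the second toy_preempt_enable() can report that a switch is due, which is why nested critical regions stay safe.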

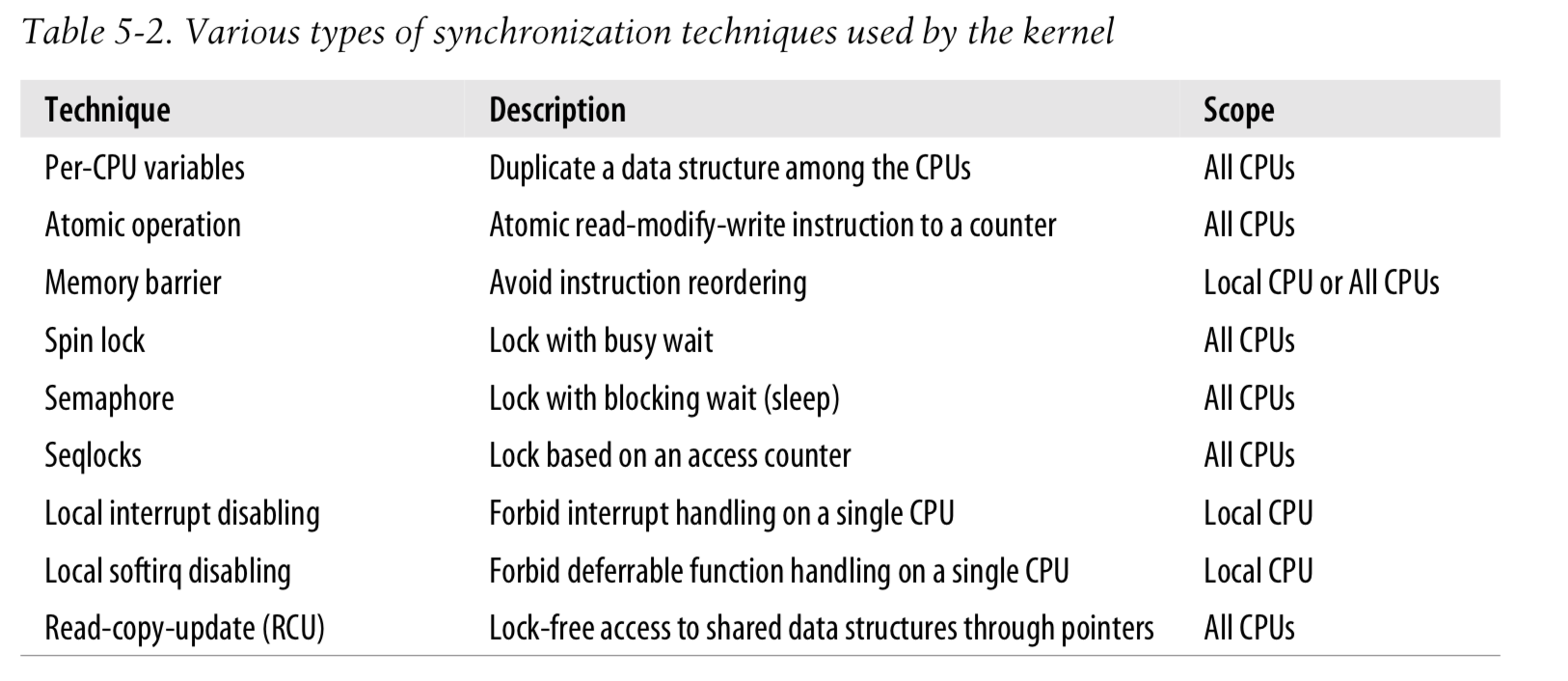

2 Synchronization Primitives

2.1 Per-CPU Variables

The simplest and most efficient synchronization technique consists of declaring kernel variables as per-CPU variables. Basically, a per-CPU variable is an array of data structures, one element per CPU in the system. Note, however, that per-CPU variables can be used only in particular cases: basically, when it makes sense to logically split the data across the CPUs of the system.

Furthermore, per-CPU variables are prone to race conditions caused by kernel preemption, both in uniprocessor and multiprocessor systems. As a general rule, a kernel control path should access a per-CPU variable with kernel preemption disabled.
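A minimal user-space sketch of the idea, assuming a fixed CPU count and hypothetical `toy_` names. In the kernel itself, the get_cpu_var() and put_cpu_var() macros bracket the access and take care of disabling and re-enabling kernel preemption. Here the caller simply passes the CPU index.

```c
#include <stddef.h>

#define TOY_NR_CPUS 4   /* assumed CPU count for this sketch */

/* One slot per CPU; each CPU only ever writes its own slot. */
struct toy_percpu_counter {
    long slot[TOY_NR_CPUS];
};

/* Runs on CPU `cpu`: no lock is needed because no other CPU writes slot[cpu]. */
static void toy_percpu_inc(struct toy_percpu_counter *c, size_t cpu)
{
    c->slot[cpu]++;
}

/* A global total has to visit every slot. */
static long toy_percpu_sum(const struct toy_percpu_counter *c)
{
    long total = 0;
    for (size_t cpu = 0; cpu < TOY_NR_CPUS; cpu++)
        total += c->slot[cpu];
    return total;
}
```

The sketch also shows the limitation mentioned above: the counter splits naturally across CPUs, but reading the total requires walking all the slots.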

2.2 Atomic Operations

Several assembly language instructions are of type “read-modify-write”—that is, they access a memory location twice, the first time to read the old value and the second time to write a new value.

The easiest way to prevent race conditions due to “read-modify-write” instructions is by ensuring that such operations are atomic at the chip level. Every such operation must be executed in a single instruction without being interrupted in the middle and avoiding accesses to the same memory location by other CPUs.
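As an illustration, here is a user-space reference counter built on C11 atomics. It is only an analogy for the kernel's atomic_t type and operations such as atomic_inc() and atomic_dec_and_test(). The `toy_ref` names are hypothetical.

```c
#include <stdatomic.h>
#include <stdbool.h>

/* Hypothetical reference count, loosely modeled on typical atomic_t usage. */
struct toy_ref {
    atomic_int count;
};

static void toy_ref_get(struct toy_ref *r)
{
    atomic_fetch_add(&r->count, 1);   /* one indivisible read-modify-write */
}

/* Returns true when the caller has dropped the last reference. */
static bool toy_ref_put(struct toy_ref *r)
{
    /* atomic_fetch_sub returns the value held before the decrement */
    return atomic_fetch_sub(&r->count, 1) == 1;
}
```

Because the decrement and the test happen in one atomic step, two CPUs dropping references at the same time cannot both conclude that they released the last one.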

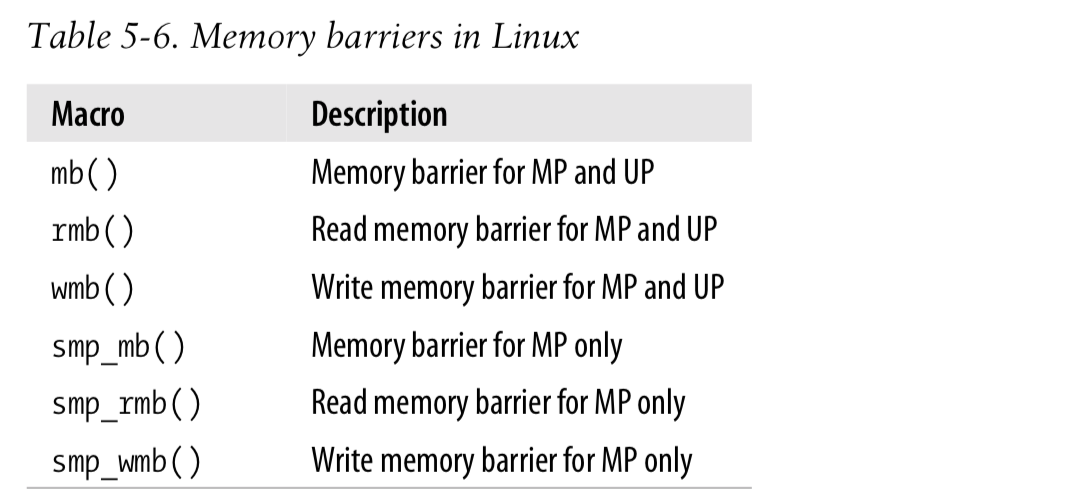

2.3 Optimization and Memory Barriers

When using optimizing compilers, you should never take for granted that instructions will be performed in the exact order in which they appear in the source code. For example, a compiler might reorder the assembly language instructions in such a way as to optimize how registers are used. Moreover, modern CPUs usually execute several instructions in parallel and might reorder memory accesses. These kinds of reordering can greatly speed up the program.

When dealing with synchronization, however, reordering instructions must be avoided. Things would quickly become hairy if an instruction placed after a synchronization primitive is executed before the synchronization primitive itself. Therefore, all synchronization primitives act as optimization and memory barriers.

An optimization barrier primitive ensures that the assembly language instructions corresponding to C statements placed before the primitive are not mixed by the compiler with assembly language instructions corresponding to C statements placed after the primitive. In Linux the barrier() macro acts as an optimization barrier.

A memory barrier primitive ensures that the operations placed before the primitive are finished before starting the operations placed after the primitive.

Notice that in multiprocessor systems, all atomic operations described in the earlier section “Atomic Operations” act as memory barriers, because on the 80x86 architecture they use the lock byte.
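The following user-space sketch shows both kinds of barrier. The `toy_barrier()` macro uses the same empty-asm idiom as the kernel's barrier() macro (GCC/Clang syntax assumed). The C11 fences only roughly play the role of the kernel's write and read memory barriers. All `toy_` names are hypothetical.

```c
#include <stdatomic.h>
#include <stdbool.h>

/* Compiler-only barrier: emits no instruction, but forbids the compiler
 * from moving memory accesses across it. */
#define toy_barrier() __asm__ __volatile__("" ::: "memory")

static int payload;          /* ordinary data being handed over */
static atomic_bool ready;    /* flag that publishes the payload */

static void toy_publish(int value)
{
    payload = value;
    /* Release fence: the payload store cannot move after the flag store. */
    atomic_thread_fence(memory_order_release);
    atomic_store_explicit(&ready, true, memory_order_relaxed);
}

/* Returns true and fills *out once the payload has been published. */
static bool toy_consume(int *out)
{
    if (!atomic_load_explicit(&ready, memory_order_relaxed))
        return false;
    /* Acquire fence: the payload load cannot move before the flag load. */
    atomic_thread_fence(memory_order_acquire);
    *out = payload;
    return true;
}
```

Without the two fences, a consumer on another CPU could observe the flag set and still read a stale payload.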

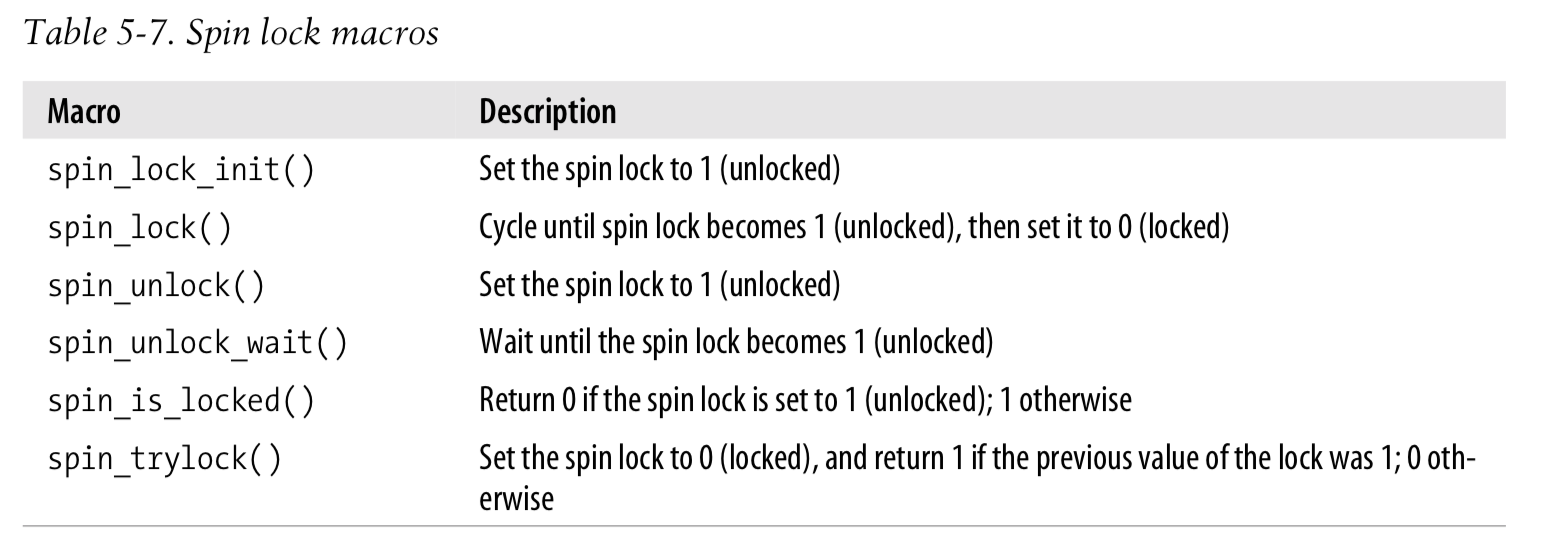

2.4 Spin Locks

Spin locks are a special kind of lock designed to work in a multiprocessor environment. If the kernel control path finds the spin lock “open,” it acquires the lock and continues its execution. Conversely, if the kernel control path finds the lock “closed” by a kernel control path running on another CPU, it “spins” around, repeatedly executing a tight instruction loop, until the lock is released.

The instruction loop of spin locks represents a “busy wait.” The waiting kernel control path keeps running on the CPU, even if it has nothing to do besides waste time. Nevertheless, spin locks are usually convenient, because many kernel resources are locked for a fraction of a millisecond only; therefore, it would be far more time-consuming to release the CPU and reacquire it later.

As a general rule, kernel preemption is disabled in every critical region protected by spin locks. In the case of a uniprocessor system, the locks themselves are useless, and the spin lock primitives just disable or enable the kernel preemption. Please notice that kernel preemption is still enabled during the busy wait phase, thus a process waiting for a spin lock to be released could be replaced by a higher priority process.
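A test-and-set lock written with C11 atomics gives the flavor of the busy wait. This is a user-space sketch with hypothetical `toy_` names, not the kernel's implementation, and it leaves out the preemption handling described above.

```c
#include <stdatomic.h>
#include <stdbool.h>

/* Hypothetical user-space spin lock; initialize with ATOMIC_FLAG_INIT. */
struct toy_spinlock {
    atomic_flag locked;
};

/* One attempt: returns true if the lock was open and is now held. */
static bool toy_spin_trylock(struct toy_spinlock *l)
{
    return !atomic_flag_test_and_set_explicit(&l->locked, memory_order_acquire);
}

static void toy_spin_lock(struct toy_spinlock *l)
{
    while (!toy_spin_trylock(l))
        ;   /* busy wait: retry until the holder releases the lock */
}

static void toy_spin_unlock(struct toy_spinlock *l)
{
    atomic_flag_clear_explicit(&l->locked, memory_order_release);
}
```

The acquire and release orderings make the lock operations act as memory barriers for the critical region, in line with the earlier remark that synchronization primitives double as barriers.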

2.5 Read/Write Spin Locks

Read/write spin locks have been introduced to increase the amount of concurrency inside the kernel. They allow several kernel control paths to simultaneously read the same data structure, as long as no kernel control path modifies it. If a kernel control path wishes to write to the structure, it must acquire the write version of the read/write lock, which grants exclusive access to the resource. Of course, allowing concurrent reads on data structures improves system performance.

Getting and releasing a lock for reading: read_lock() and read_unlock()

Getting and releasing a lock for writing: write_lock() and write_unlock()
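One way to obtain "many readers or one writer" from a single counter is a biased count: the lock is free when the counter equals a large bias, each reader subtracts 1, and a writer subtracts the whole bias. The sketch below shows only non-blocking "try" variants. The bias value and all `toy_` names are assumptions made for illustration.

```c
#include <stdatomic.h>
#include <stdbool.h>

#define TOY_RW_BIAS 0x01000000   /* counter value of a free lock (assumed) */

struct toy_rwlock {
    atomic_int count;   /* readers subtract 1, a writer subtracts the bias */
};

static bool toy_read_trylock(struct toy_rwlock *l)
{
    /* An old value > 0 means no writer holds the lock. */
    if (atomic_fetch_sub(&l->count, 1) > 0)
        return true;
    atomic_fetch_add(&l->count, 1);             /* undo the failed attempt */
    return false;
}

static void toy_read_unlock(struct toy_rwlock *l)
{
    atomic_fetch_add(&l->count, 1);
}

static bool toy_write_trylock(struct toy_rwlock *l)
{
    /* An old value equal to the bias means no reader and no writer. */
    if (atomic_fetch_sub(&l->count, TOY_RW_BIAS) == TOY_RW_BIAS)
        return true;
    atomic_fetch_add(&l->count, TOY_RW_BIAS);   /* undo the failed attempt */
    return false;
}

static void toy_write_unlock(struct toy_rwlock *l)
{
    atomic_fetch_add(&l->count, TOY_RW_BIAS);
}
```

A failed attempt briefly changes the counter before undoing it, so a concurrent "try" may fail spuriously. That is acceptable for try variants, which callers are expected to retry.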

2.6 Seqlocks

When using read/write spin locks, requests issued by kernel control paths to perform a read_lock or a write_lock operation have the same priority: readers must wait until the writer has finished and, similarly, a writer must wait until all readers have finished.

Seqlocks are similar to read/write spin locks, except that they give a much higher priority to writers: in fact a writer is allowed to proceed even when readers are active. The good part of this strategy is that a writer never waits (unless another writer is active); the bad part is that a reader may sometimes be forced to read the same data several times until it gets a valid copy.

The critical regions of the readers should be short, and writers should seldom acquire the seqlock; otherwise, repeated read attempts would cause severe overhead.
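The retry protocol can be sketched with a sequence counter that is odd while a write is in progress. This is a user-space illustration with hypothetical `toy_` names. It assumes writers are already serialized by some other lock, a job that the spin lock inside a kernel seqlock takes care of. The kernel's reader-side counterparts are read_seqbegin() and read_seqretry().

```c
#include <stdatomic.h>
#include <stdbool.h>

/* Hypothetical seqlock-protected pair of values. */
struct toy_seq_pair {
    atomic_uint seq;   /* odd while a write is in progress */
    atomic_int a, b;   /* protected data; atomics avoid C-level data races */
};

static void toy_write_pair(struct toy_seq_pair *p, int a, int b)
{
    atomic_fetch_add(&p->seq, 1);   /* now odd: readers will retry */
    atomic_store(&p->a, a);
    atomic_store(&p->b, b);
    atomic_fetch_add(&p->seq, 1);   /* now even: data is consistent again */
}

static unsigned toy_read_begin(struct toy_seq_pair *p)
{
    return atomic_load(&p->seq);
}

/* True if a snapshot taken since `start` may be inconsistent. */
static bool toy_read_retry(struct toy_seq_pair *p, unsigned start)
{
    return (start & 1u) || atomic_load(&p->seq) != start;
}

static void toy_read_pair(struct toy_seq_pair *p, int *a, int *b)
{
    unsigned start;
    do {
        start = toy_read_begin(p);
        *a = atomic_load(&p->a);
        *b = atomic_load(&p->b);
    } while (toy_read_retry(p, start));
}
```

The reader never blocks the writer. It only detects, after the fact, that a write overlapped its read and then tries again.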

2.7 Read-Copy Update (RCU)

Further reading: 深入理解 Linux 的 RCU 机制 ("An In-Depth Look at the Linux RCU Mechanism")

Read-copy update (RCU) is yet another synchronization technique designed to protect data structures that are mostly accessed for reading by several CPUs. RCU is lock-free, that is, it uses no lock or counter shared by all CPUs; this is a great advantage over read/write spin locks and seqlocks, which have a high overhead due to cache line-snooping and invalidation.

How does RCU obtain the surprising result of synchronizing several CPUs without shared data structures? The key idea consists of limiting the scope of RCU as follows:

- Only data structures that are dynamically allocated and referenced by means of pointers can be protected by RCU.

- No kernel control path can sleep inside a critical region protected by RCU.
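The two restrictions above make a "copy, update, publish" pattern possible. The user-space sketch below shows only the pointer handling. It deliberately omits the hard part, which is waiting until no reader can still hold the old copy before freeing it. In the kernel, readers mark their critical regions with rcu_read_lock() and rcu_read_unlock(), and call_rcu() defers the freeing. The `toy_` names and the single-writer assumption are mine.

```c
#include <stdatomic.h>
#include <stdlib.h>

struct toy_config {
    int timeout;
};

/* Shared pointer to the current version; readers only ever load it. */
static _Atomic(struct toy_config *) toy_current;

/* Reader side: a single pointer load, no lock and no shared counter. */
static int toy_read_timeout(void)
{
    struct toy_config *c =
        atomic_load_explicit(&toy_current, memory_order_acquire);
    return c ? c->timeout : -1;
}

/*
 * Writer side (a single writer is assumed): copy the current version,
 * modify the private copy, then publish it with one pointer store.
 * *old_out receives the previous version, which must NOT be freed until
 * every reader that might still be using it has finished.
 * Returns 0 on success, -1 if the allocation fails.
 */
static int toy_update_timeout(int timeout, struct toy_config **old_out)
{
    struct toy_config *old =
        atomic_load_explicit(&toy_current, memory_order_relaxed);
    struct toy_config *new_cfg = malloc(sizeof(*new_cfg));
    if (!new_cfg)
        return -1;
    if (old)
        *new_cfg = *old;              /* copy */
    new_cfg->timeout = timeout;       /* update the private copy */
    atomic_store_explicit(&toy_current, new_cfg, memory_order_release);
    *old_out = old;                   /* caller frees it later, when safe */
    return 0;
}
```

Readers see either the complete old version or the complete new one, never a half-updated structure, because the only shared write is the pointer store.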

2.8 Semaphores

Essentially, semaphores implement a locking primitive that allows waiters to sleep until the desired resource becomes free.

Actually, Linux offers two kinds of semaphores:

- Kernel semaphores, which are used by kernel control paths

- System V IPC semaphores, which are used by User Mode processes

In this section, we focus on kernel semaphores.

A kernel semaphore is similar to a spin lock, in that it doesn’t allow a kernel control path to proceed unless the lock is open. However, whenever a kernel control path tries to acquire a busy resource protected by a kernel semaphore, the corresponding process is suspended. It becomes runnable again when the resource is released. Therefore, kernel semaphores can be acquired only by functions that are allowed to sleep; interrupt handlers and deferrable functions cannot use them.

The init_MUTEX() and init_MUTEX_LOCKED() functions may be used to initialize a semaphore for exclusive access.

Getting and releasing semaphores: down() acquires a semaphore and up() releases it.
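The counter protocol behind a semaphore can be sketched in user space, with one important omission: this toy version never sleeps, so it models only a non-blocking acquire in the spirit of the kernel's down_trylock(), which also returns 0 on success. A real down() would instead suspend the process on a wait queue. The `toy_` names are hypothetical.

```c
#include <stdatomic.h>

/* Hypothetical counting semaphore without a wait queue. */
struct toy_sem {
    atomic_int count;   /* > 0: that many more holders may enter */
};

/* Non-blocking acquire: returns 0 on success, 1 if the semaphore is busy. */
static int toy_down_trylock(struct toy_sem *s)
{
    int c = atomic_load(&s->count);
    while (c > 0) {
        /* On failure the compare-exchange reloads c, so the loop
         * re-checks the fresh value. */
        if (atomic_compare_exchange_weak(&s->count, &c, c - 1))
            return 0;
    }
    return 1;
}

/* Release: makes one more slot available. */
static void toy_up(struct toy_sem *s)
{
    atomic_fetch_add(&s->count, 1);
}
```

Initializing the count to 1 gives exclusive access (a mutex). A larger initial value admits that many concurrent holders.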

2.9 Read/Write Semaphores

Read/write semaphores are similar to the read/write spin locks described earlier in the section “Read/Write Spin Locks,” except that waiting processes are suspended instead of spinning until the semaphore becomes open again.

Many kernel control paths may concurrently acquire a read/write semaphore for reading; however, every writer kernel control path must have exclusive access to the protected resource. Therefore, the semaphore can be acquired for writing only if no other kernel control path is holding it for either read or write access. Read/write semaphores improve the amount of concurrency inside the kernel and improve overall system performance.

The down_read() and down_write() functions acquire the read/write semaphore for reading and writing, respectively. Similarly, the up_read() and up_write() functions release a read/write semaphore previously acquired for reading and for writing.

2.10 Completions

Linux 2.6 also makes use of another synchronization primitive similar to semaphores: completions. They were introduced to solve a subtle race condition that occurs in multiprocessor systems when process A allocates a temporary semaphore variable, initializes it as a closed MUTEX, passes its address to process B, and then invokes down() on it. Process A plans to destroy the semaphore as soon as it awakens. Later on, process B, running on a different CPU, invokes up() on the semaphore. However, in the current implementation up() and down() can execute concurrently on the same semaphore. Thus, process A can be woken up and destroy the temporary semaphore while process B is still executing the up() function. As a result, up() might attempt to access a data structure that no longer exists. Completions avoid this problem: their counterparts of up() and down(), namely complete() and wait_for_completion(), cannot execute concurrently on the same completion.

2.11 Local Interrupt Disabling

Interrupt disabling is one of the key mechanisms used to ensure that a sequence of kernel statements is treated as a critical section. It allows a kernel control path to continue executing even when hardware devices issue IRQ signals, thus providing an effective way to protect data structures that are also accessed by interrupt handlers. By itself, however, local interrupt disabling does not protect against concurrent accesses to data structures by interrupt handlers running on other CPUs, so in multiprocessor systems, local interrupt disabling is often coupled with spin locks.

The local_irq_disable() macro disables interrupts on the local CPU. The local_irq_enable() macro enables them.

2.12 Disabling and Enabling Deferrable Functions

Deferrable functions can be executed at unpredictable times (essentially, on termination of hardware interrupt handlers). Therefore, data structures accessed by deferrable functions must be protected against race conditions.

The kernel sometimes needs to disable deferrable functions without disabling interrupts. Deferrable functions can be enabled or disabled on the local CPU by acting on the softirq counter stored in the preempt_count field of the current process's thread_info descriptor.
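The sketch below models the softirq counter as a subfield of a single integer. The field widths (8 bits for the preemption counter, then 8 bits for the softirq counter) are assumed from the 2.6-era layout, and the `toy_` functions only imitate the bookkeeping done by local_bh_disable() and local_bh_enable(). The real local_bh_enable() also runs pending softirqs, which is left out here.

```c
#include <stdbool.h>

/* Assumed field layout, following the 2.6-era convention. */
#define TOY_PREEMPT_BITS   8
#define TOY_SOFTIRQ_BITS   8
#define TOY_SOFTIRQ_SHIFT  TOY_PREEMPT_BITS
#define TOY_SOFTIRQ_OFFSET (1u << TOY_SOFTIRQ_SHIFT)
#define TOY_SOFTIRQ_MASK   (((1u << TOY_SOFTIRQ_BITS) - 1) << TOY_SOFTIRQ_SHIFT)

/* Stands in for the preempt_count field of the current process. */
static unsigned toy_preempt_count;

/* Calls may nest: each disable adds one to the softirq subfield. */
static void toy_local_bh_disable(void)
{
    toy_preempt_count += TOY_SOFTIRQ_OFFSET;
}

static void toy_local_bh_enable(void)
{
    toy_preempt_count -= TOY_SOFTIRQ_OFFSET;
}

/* Deferrable functions may run only while the softirq subfield is zero. */
static bool toy_softirqs_enabled(void)
{
    return (toy_preempt_count & TOY_SOFTIRQ_MASK) == 0;
}

/* Any nonzero subfield also keeps kernel preemption off. */
static bool toy_preemptible(void)
{
    return toy_preempt_count == 0;
}
```

Because the softirq counter lives inside the same integer that is tested for preemption, disabling deferrable functions disables kernel preemption as a side effect.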

3 Synchronizing Accesses to Kernel Data Structures

Usually, the following rule of thumb is adopted by kernel developers: always keep the concurrency level as high as possible in the system.

In turn, the concurrency level in the system depends on two main factors:

- The number of I/O devices that operate concurrently

- The number of CPUs that do productive work

To maximize I/O throughput, interrupts should be disabled for very short periods of time. To use CPUs efficiently, synchronization primitives based on spin locks should be avoided whenever possible.

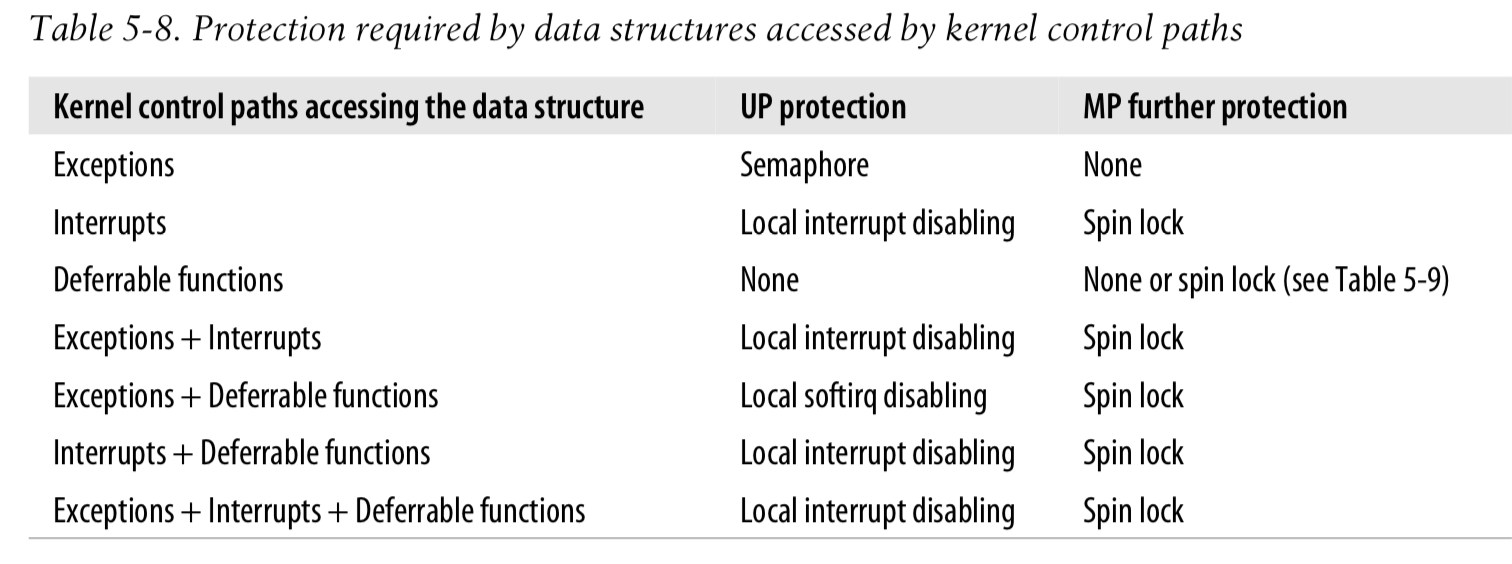

3.1 Choosing Among Spin Locks, Semaphores, and Interrupt Disabling

Generally speaking, choosing the synchronization primitives depends on what kinds of kernel control paths access the data structure, as shown in Table 5-8. Remember that whenever a kernel control path acquires a spin lock (as well as a read/write lock, a seqlock, or an RCU “read lock”), disables the local interrupts, or disables the local softirqs, kernel preemption is automatically disabled.