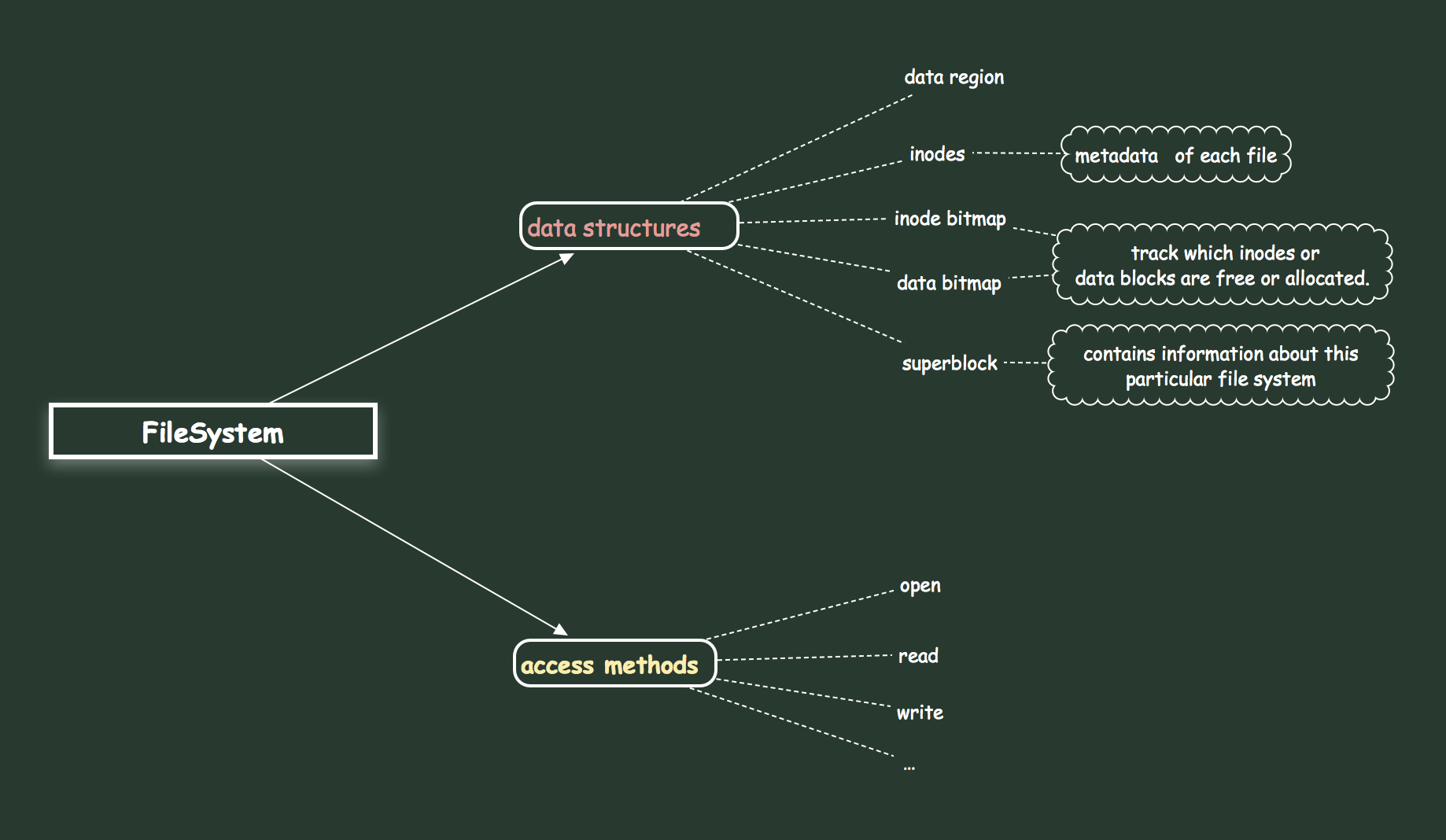

File System Overview

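A file system is pure software. To see exactly where the file system sits in the storage stack, refer to the Linux Storage Stack Diagram and the Linux storage I/O stack.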

File System Implementation gives a good explanation of how a file system is implemented.

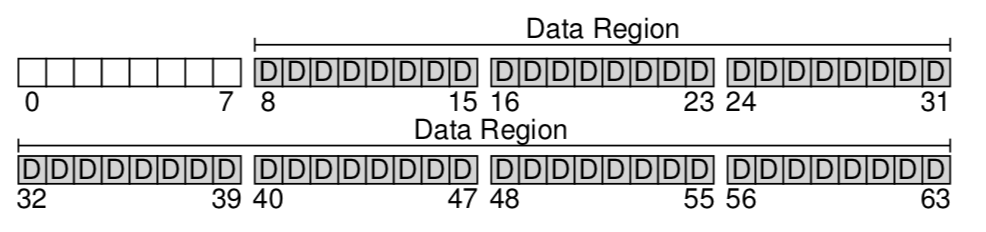

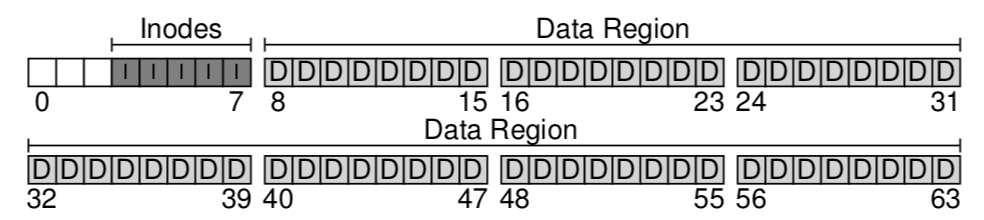

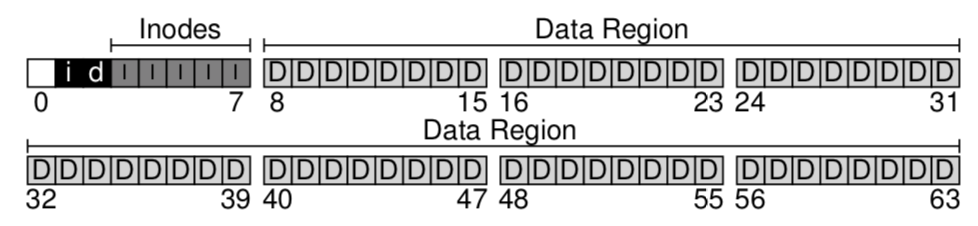

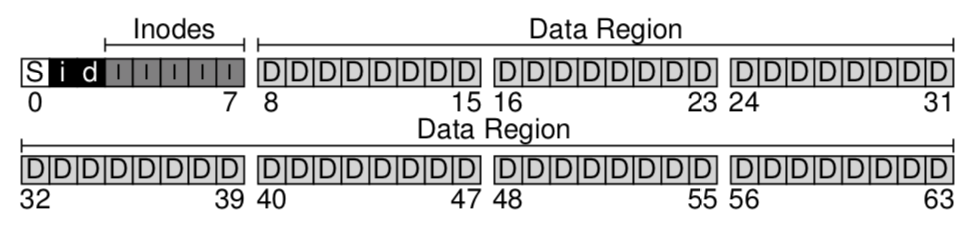

- data region

- inodes

- inode bitmap and data bitmap

- superblock

The superblock contains information about this particular file system, including, for example, how many inodes and data blocks are in the file system, where the inode table begins and so forth.
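As a rough illustration of how the superblock's fields get used, the sketch below locates an inode on disk from its inode number. The `Superblock` fields, the `locate_inode` helper, and the concrete sizes (4 KB blocks, 256-byte inodes, inode table starting at block 3) are assumptions chosen for the example, not values taken from any particular file system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Superblock:
    """Hypothetical superblock holding the layout facts described above."""
    block_size: int               # bytes per block
    inode_size: int               # bytes per on-disk inode
    num_inodes: int               # total inodes in the file system
    num_data_blocks: int          # total blocks in the data region
    inode_table_start_block: int  # first block of the inode table


def locate_inode(sb, inumber):
    """Return (block number, byte offset inside that block) for an inode."""
    if not 0 <= inumber < sb.num_inodes:
        raise ValueError("inode number out of range")
    byte_offset = inumber * sb.inode_size
    block = sb.inode_table_start_block + byte_offset // sb.block_size
    return block, byte_offset % sb.block_size


# Assumed example layout: 4 KB blocks, 256-byte inodes, table starts at block 3.
sb = Superblock(block_size=4096, inode_size=256, num_inodes=80,
                num_data_blocks=56, inode_table_start_block=3)
print(locate_inode(sb, 32))
```

With these assumed sizes each block holds 16 inodes, so inode 32 lands at the start of the third inode-table block.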

Fast File System (FFS)

For details, see Fast File System.

The idea was to design the file system structures and allocation policies to be “disk aware” and thus improve performance.

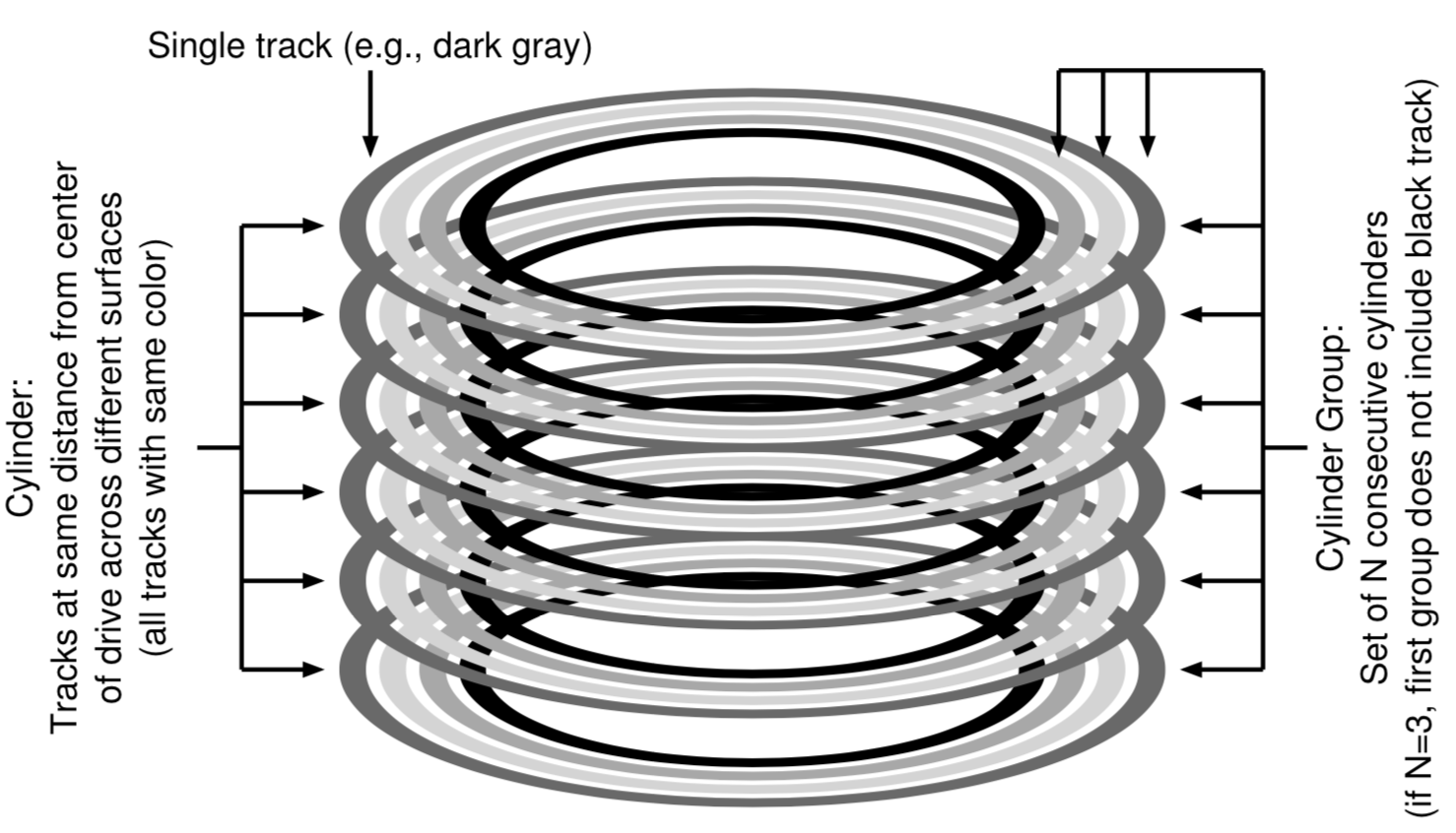

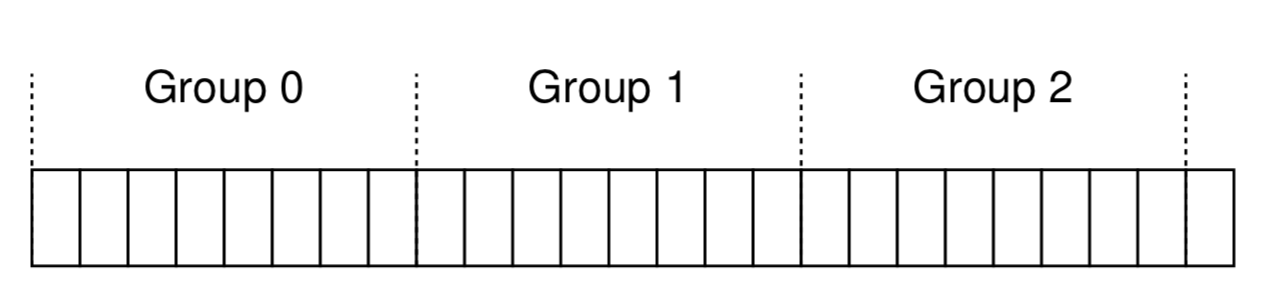

FFS divides the disk into a number of cylinder groups.

Modern file systems (such as Linux ext2, ext3, and ext4) instead organize the drive into block groups, each of which is just a consecutive portion of the disk’s address space.

By placing two files within the same group, FFS can ensure that accessing one after the other will not result in long seeks across the disk.

FFS has to decide what is “related” and place it within the same block group.
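One way to picture the placement decision is the sketch below. It follows the commonly described FFS heuristics: a new directory goes to a group with few directories and many free inodes, and a new file goes to the group of its parent directory. The `Group` class and both function names are hypothetical, and real allocators track far more state (free data blocks, large-file handling, and so on).

```python
from dataclasses import dataclass


@dataclass
class Group:
    """Hypothetical per-group bookkeeping for the placement sketch."""
    index: int
    free_inodes: int
    num_dirs: int = 0


def pick_group_for_directory(groups):
    """Spread directories out: fewest directories first, then most free inodes."""
    candidates = [g for g in groups if g.free_inodes > 0]
    if not candidates:
        raise RuntimeError("no free inodes in any group")
    return min(candidates, key=lambda g: (g.num_dirs, -g.free_inodes))


def pick_group_for_file(groups, parent_group_index):
    """Keep a file near its directory; fall back to the emptiest group."""
    parent = groups[parent_group_index]
    if parent.free_inodes > 0:
        return parent
    candidates = [g for g in groups if g.free_inodes > 0]
    if not candidates:
        raise RuntimeError("no free inodes in any group")
    return max(candidates, key=lambda g: g.free_inodes)


groups = [Group(0, free_inodes=10, num_dirs=2),
          Group(1, free_inodes=8),
          Group(2, free_inodes=9)]
dir_group = pick_group_for_directory(groups)
file_group = pick_group_for_file(groups, dir_group.index)
print(dir_group.index, file_group.index)
```

Files created in the same directory therefore share a group, which keeps seeks short when they are accessed one after another.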

All modern systems account for the main lesson of FFS: treat the disk like it’s a disk.

Crash-Consistency Problem

For details, see FSCK and Journaling.

One major challenge faced by a file system is how to update persistent data structures despite the presence of a power loss or system crash.

This problem is quite simple to understand. Imagine you have to update two on-disk structures, A and B, in order to complete a particular operation. Because the disk only services a single request at a time, one of these requests will reach the disk first (either A or B). If the system crashes or loses power after one write completes, the on-disk structure will be left in an inconsistent state. And thus, we have a problem that all file systems need to solve.
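The two-structure scenario can be simulated in a few lines. In this sketch, structure A is a data bitmap and structure B is an inode's block list. The "disk" is a plain dictionary and `crash_after` is a made-up knob that stops the operation after a given number of writes. All of these names are assumptions for illustration.

```python
def new_disk():
    """A toy 'disk' with a data bitmap (A) and one inode's block list (B)."""
    return {"bitmap": [0] * 8, "inode_blocks": []}


def append_block(disk, block_no, crash_after=None):
    """Append a block to the file; optionally crash after N completed writes."""
    def write_bitmap():          # write A: mark the block as allocated
        disk["bitmap"][block_no] = 1

    def write_inode():           # write B: point the inode at the block
        disk["inode_blocks"].append(block_no)

    for done, step in enumerate([write_bitmap, write_inode]):
        if crash_after is not None and done >= crash_after:
            return               # simulated power loss
        step()


def is_consistent(disk):
    """The bitmap and the inode must agree on which blocks are in use."""
    allocated = {i for i, bit in enumerate(disk["bitmap"]) if bit}
    return allocated == set(disk["inode_blocks"])


crashed = new_disk()
append_block(crashed, 5, crash_after=1)   # only the bitmap write lands
clean = new_disk()
append_block(clean, 5)                    # both writes land
print(is_consistent(crashed), is_consistent(clean))
```

In the crashed case the bitmap says block 5 is in use while no inode refers to it, so the block is leaked until something repairs the mismatch.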

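Journaling (also known as write-ahead logging) is a technique in which the file system first records a description of the pending update in a log on disk and only then overwrites the structures in place. This adds a little overhead to each write, but recovery from a crash or power loss is much faster: the file system only has to replay the log instead of scanning the whole disk as fsck does.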
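A minimal sketch of the write-ahead idea follows, assuming a toy in-memory model: `JournaledDisk`, its record tuples, and the `commit` flag used to simulate a crash before the commit record is written are all hypothetical. Real journals (for example in ext3/ext4) also deal with block ordering, checksums, and log space reuse, none of which appears here.

```python
class JournaledDisk:
    """Toy write-ahead log: updates reach home locations only via the journal."""

    def __init__(self):
        self.blocks = {}    # home locations: structure name -> contents
        self.journal = []   # append-only list of log records

    def log_transaction(self, txid, updates, commit=True):
        """Write the transaction to the journal; commit=False models a crash
        that happens before the commit record reaches the disk."""
        self.journal.append(("begin", txid))
        for name, value in updates.items():
            self.journal.append(("write", txid, name, value))
        if commit:
            self.journal.append(("commit", txid))

    def recover(self):
        """Replay committed transactions in log order; drop incomplete ones."""
        committed = {rec[1] for rec in self.journal if rec[0] == "commit"}
        for rec in self.journal:
            if rec[0] == "write" and rec[1] in committed:
                _, _, name, value = rec
                self.blocks[name] = value
        self.journal.clear()


disk = JournaledDisk()
disk.log_transaction(1, {"A": "a1", "B": "b1"})                 # fully committed
disk.log_transaction(2, {"A": "a2", "B": "b2"}, commit=False)   # crash mid-way
disk.recover()
print(disk.blocks)
```

After recovery, A and B both reflect transaction 1 and nothing from transaction 2, so the two structures never disagree.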

Log-structured File Systems

In essence, a log-structured file system turns random writes into sequential writes. For details, see Log-structured File Systems.

LFS introduces a new approach to updating the disk. Instead of overwriting files in place, LFS always writes to an unused portion of the disk, and then later reclaims that old space through cleaning. LFS can gather all updates into an in-memory segment and then write them out together sequentially.

The downside to this approach is that it generates garbage; old copies of the data are scattered throughout the disk, and if one wants to reclaim such space for subsequent usage, one must clean old segments periodically.
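The buffering, appending, and cleaning described above can be sketched as a toy model. `TinyLFS` and its methods are hypothetical names, and the "disk" is a Python list. One loud simplification: `clean` compacts the whole log at once, whereas a real LFS cleans a few segments at a time.

```python
class TinyLFS:
    """Toy log-structured store: buffer writes, append them, never overwrite."""

    def __init__(self, segment_size=4):
        self.segment_size = segment_size
        self.segment = []   # in-memory segment of (key, data) updates
        self.log = []       # the "disk": an append-only list of (key, data)
        self.imap = {}      # key -> log index of the newest copy

    def write(self, key, data):
        self.segment.append((key, data))
        if len(self.segment) >= self.segment_size:
            self.flush()

    def flush(self):
        """Write the whole segment out sequentially at the end of the log."""
        for key, data in self.segment:
            self.imap[key] = len(self.log)
            self.log.append((key, data))
        self.segment = []

    def read(self, key):
        for k, data in reversed(self.segment):   # newest buffered copy first
            if k == key:
                return data
        return self.log[self.imap[key]][1]

    def garbage(self):
        """Number of stale copies still occupying space in the log."""
        return len(self.log) - len(self.imap)

    def clean(self):
        """Copy live data forward and drop old versions (whole-log compaction)."""
        live = sorted(self.imap.items(), key=lambda kv: kv[1])
        old_log, self.log, self.imap = self.log, [], {}
        for key, index in live:
            self.imap[key] = len(self.log)
            self.log.append(old_log[index])


fs = TinyLFS(segment_size=2)
for key, data in [("a", 1), ("b", 2), ("a", 3), ("c", 4)]:
    fs.write(key, data)
before = fs.garbage()   # the old copy of "a" is now garbage
fs.clean()
print(before, fs.garbage(), fs.read("a"))
```

The second write to "a" never touches the first copy. It simply lands later in the log, which is why the old copy lingers as garbage until cleaning runs.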