The usage of VFIO

1. prerequisite

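It is recommended to read Alex Williamson's slides, An Introduction to PCI Device Assignment with VFIO, preferably more than once. If you are interested, you can also watch the video of the talk.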

2. device, group, container

2.1 device

A device is the hardware device we want to operate on.

2.2 group

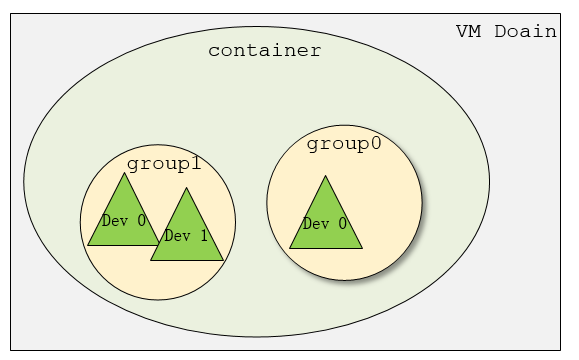

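A group is the smallest hardware unit for which the IOMMU can provide DMA isolation. A group may contain a single device or several devices, depending on the IOMMU topology of the physical platform. When devices are passed through, all devices in a group must be assigned to the same virtual machine. The devices of one group cannot be split between two different VMs, nor can some of them stay on the host while the others are assigned to a guest. In addition, a VFIO group and an IOMMU group can be regarded as the same concept.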

2.3 container

While the group is the minimum granularity that must be used to ensure secure user access, it’s not necessarily the preferred granularity. In IOMMUs which make use of page tables, it may be possible to share a set of page tables between different groups, reducing the overhead both to the platform (reduced TLB thrashing, reduced duplicate page tables), and to the user (programming only a single set of translations). For this reason, VFIO makes use of a container class, which may hold one or more groups. A container is created by simply opening the /dev/vfio/vfio character device.

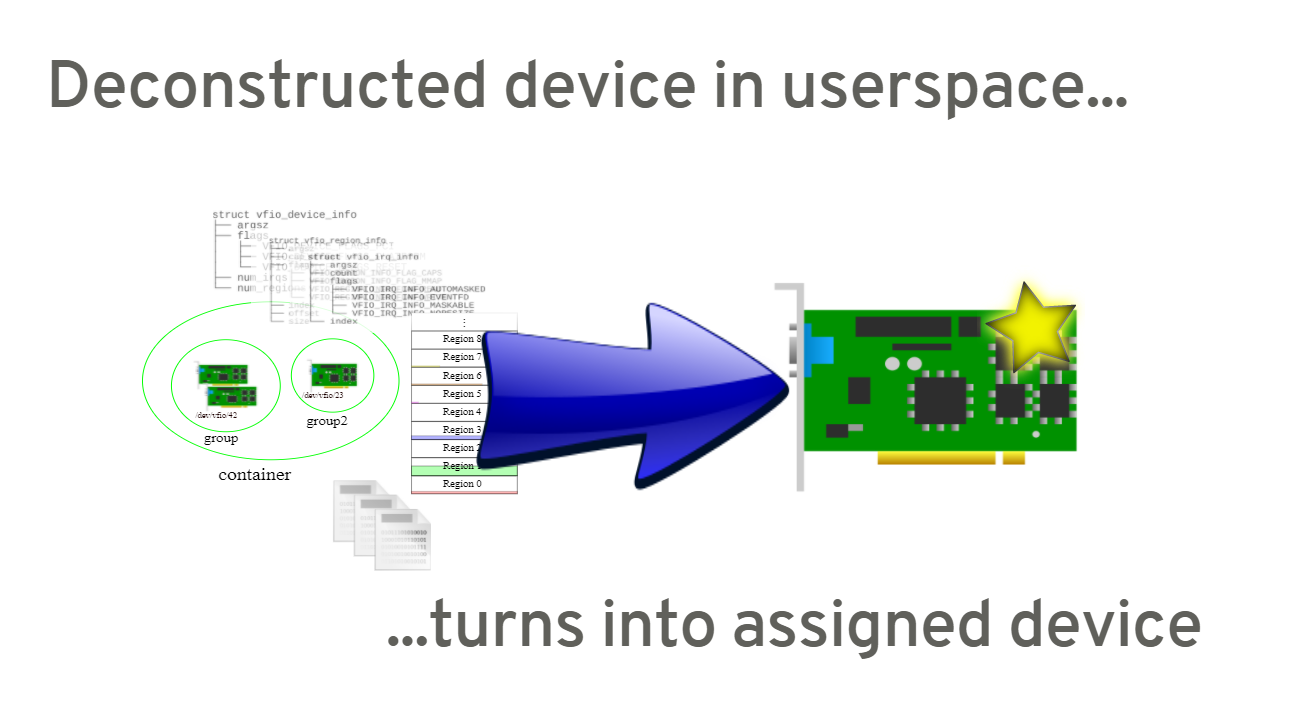

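As the figure above shows, one or more devices belong to a group, and one or more groups belong to a container. To pass a device through to a VM, first find the IOMMU group the device belongs to, then add the whole group to the container.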

3. VFIO Usage Example

To see which group each device belongs to, list all IOMMU groups in sysfs and identify the device to pass through. In the example below, IOMMU group 3 contains two devices, 0:14.0 and 0:14.2.

$ tree /sys/kernel/iommu_groups/

Assume the user wants to access PCI device 0000:00:02.0:

$ readlink /sys/bus/pci/devices/0000:00:02.0/iommu_group

../../../kernel/iommu_groups/1

This device is therefore in IOMMU group 1. The device is on the PCI bus, so the user will make use of vfio-pci to manage the group:

# modprobe vfio-pci
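The group lookup done earlier with readlink can also be performed from a program. The following is a minimal sketch, assuming the sysfs layout shown above; `parse_iommu_group` and `pci_iommu_group` are hypothetical helper names introduced for illustration and are not part of any VFIO API.

```c
#include <ctype.h>
#include <limits.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <unistd.h>

/* Hypothetical helper: extract the group number from a link target such as
 * "../../../kernel/iommu_groups/1". Returns -1 if the target is malformed. */
static int parse_iommu_group(const char *target)
{
    const char *base = strrchr(target, '/');
    char *end;
    long n;

    base = base ? base + 1 : target;
    if (!isdigit((unsigned char)*base))
        return -1;
    n = strtol(base, &end, 10);
    if (*end != '\0' || n > INT_MAX)
        return -1;
    return (int)n;
}

/* Hypothetical helper: resolve the IOMMU group of a PCI device given its
 * full address, e.g. "0000:00:02.0". Returns -1 on error. */
static int pci_iommu_group(const char *bdf)
{
    char path[512], target[512];
    ssize_t len;

    snprintf(path, sizeof(path), "/sys/bus/pci/devices/%s/iommu_group", bdf);
    len = readlink(path, target, sizeof(target) - 1);
    if (len < 0)
        return -1;
    target[len] = '\0';   /* readlink() does not NUL-terminate */
    return parse_iommu_group(target);
}
```

The returned number is the `N` in `/dev/vfio/N`, the group character device that appears once the device is bound to vfio-pci.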

Binding this device to the vfio-pci driver creates the VFIO group character devices for this group:

$ lspci -n -s 0000:00:02.0

00:02.0 0300: 8086:5916 (rev 02)

# echo 0000:00:02.0 > /sys/bus/pci/devices/0000:00:02.0/driver/unbind

# echo 8086 5916 > /sys/bus/pci/drivers/vfio-pci/new_id
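The two echo commands above are plain writes to sysfs attributes, so they can be sketched in C as follows. This is an illustrative sketch rather than a definitive implementation: `sysfs_write` and `bind_to_vfio_pci` are hypothetical helper names, and the code assumes the device currently has a driver bound (otherwise the `driver/unbind` attribute does not exist and the first write fails).

```c
#include <fcntl.h>
#include <stdio.h>
#include <string.h>
#include <unistd.h>

/* Hypothetical helper: write a string to an existing sysfs attribute.
 * Returns 0 on success, -1 on error. */
static int sysfs_write(const char *path, const char *value)
{
    size_t len = strlen(value);
    int fd = open(path, O_WRONLY);
    ssize_t n;

    if (fd < 0)
        return -1;
    n = write(fd, value, len);
    close(fd);
    return (n == (ssize_t)len) ? 0 : -1;
}

/* Hypothetical helper: detach a device from its current driver, then
 * register its vendor/device ID with vfio-pci so that vfio-pci claims it.
 * bdf is e.g. "0000:00:02.0"; vendor/device are e.g. 0x8086 and 0x5916. */
static int bind_to_vfio_pci(const char *bdf, unsigned vendor, unsigned device)
{
    char path[512], id[32];

    snprintf(path, sizeof(path), "/sys/bus/pci/devices/%s/driver/unbind", bdf);
    if (sysfs_write(path, bdf) < 0)
        return -1;

    /* Same format as "echo 8086 5916 > .../new_id" */
    snprintf(id, sizeof(id), "%04x %04x", vendor, device);
    return sysfs_write("/sys/bus/pci/drivers/vfio-pci/new_id", id);
}
```

Like the shell commands, these writes require root privileges and the vfio-pci module to be loaded.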

Now we need to look at what other devices are in the group to free it for use by VFIO:

$ ls -l /sys/bus/pci/devices/0000:00:02.0/iommu_group/devices

total 0

lrwxrwxrwx 1 root root 0 Jun 29 10:54 0000:00:02.0 -> ../../../../devices/pci0000:00/0000:00:02.0

No other devices are in the group.

The final step is to provide the user with access to the group if unprivileged operation is desired (note that /dev/vfio/vfio provides no capabilities on its own and is therefore expected to be set to mode 0666 by the system):

# chown user:user /dev/vfio/1

The user now has full access to all the devices and the IOMMU for this group and can access them as follows:

#include <fcntl.h>
#include <stdint.h>
#include <sys/ioctl.h>
#include <sys/mman.h>
#include <linux/vfio.h>

int container, group, device, i;
struct vfio_group_status group_status = { .argsz = sizeof(group_status) };
struct vfio_iommu_type1_info iommu_info = { .argsz = sizeof(iommu_info) };
struct vfio_iommu_type1_dma_map dma_map = { .argsz = sizeof(dma_map) };
struct vfio_device_info device_info = { .argsz = sizeof(device_info) };

/* Create a new container */
container = open("/dev/vfio/vfio", O_RDWR);

if (ioctl(container, VFIO_GET_API_VERSION) != VFIO_API_VERSION) {
    /* Unknown API version */
}

if (!ioctl(container, VFIO_CHECK_EXTENSION, VFIO_TYPE1_IOMMU)) {
    /* Doesn't support the IOMMU driver we want. */
}

/* Open the group */
group = open("/dev/vfio/1", O_RDWR);

/* Test the group is viable and available */
ioctl(group, VFIO_GROUP_GET_STATUS, &group_status);

if (!(group_status.flags & VFIO_GROUP_FLAGS_VIABLE)) {
    /* Group is not viable (ie, not all devices bound for vfio) */
}

/* Add the group to the container */
ioctl(group, VFIO_GROUP_SET_CONTAINER, &container);

/* Enable the IOMMU model we want */
ioctl(container, VFIO_SET_IOMMU, VFIO_TYPE1_IOMMU);

/* Get additional IOMMU info */
ioctl(container, VFIO_IOMMU_GET_INFO, &iommu_info);

/* Allocate some space and setup a DMA mapping.
 * vaddr is a 64-bit integer field, so the pointer is cast explicitly;
 * anonymous mappings conventionally pass fd = -1. */
dma_map.vaddr = (__u64)(uintptr_t)mmap(NULL, 1024 * 1024, PROT_READ | PROT_WRITE,
                                       MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
dma_map.size = 1024 * 1024;
dma_map.iova = 0; /* 1MB starting at 0x0 from device view */
dma_map.flags = VFIO_DMA_MAP_FLAG_READ | VFIO_DMA_MAP_FLAG_WRITE;

ioctl(container, VFIO_IOMMU_MAP_DMA, &dma_map);

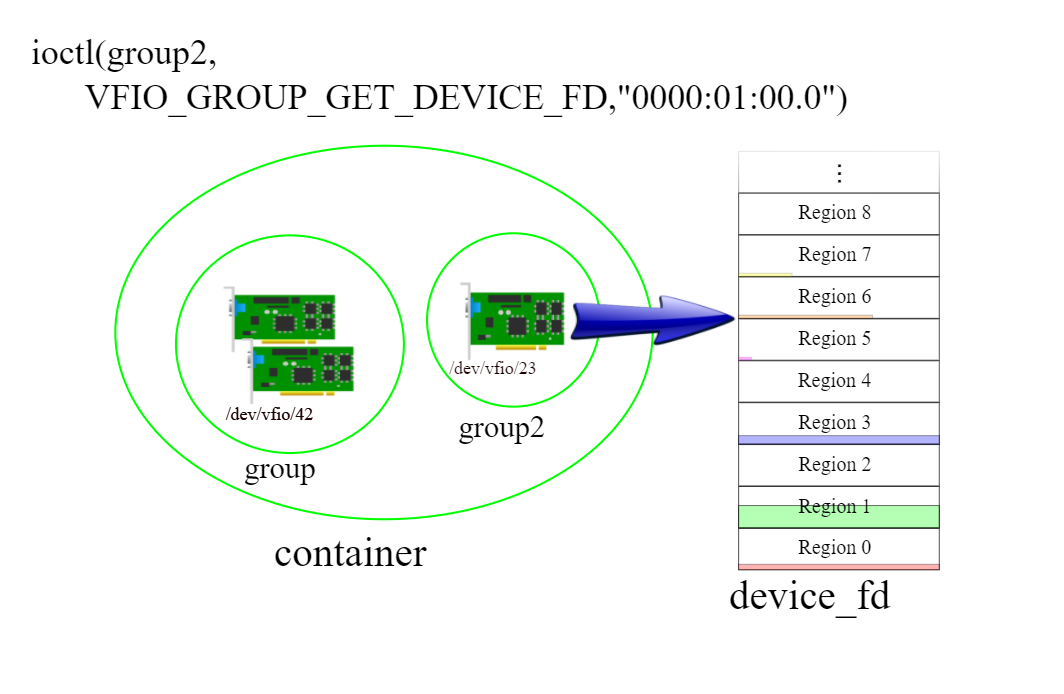

/* Get a file descriptor for the device */
device = ioctl(group, VFIO_GROUP_GET_DEVICE_FD, "0000:00:02.0");

/* Test and setup the device */
ioctl(device, VFIO_DEVICE_GET_INFO, &device_info);

for (i = 0; i < device_info.num_regions; i++) {
    struct vfio_region_info reg = { .argsz = sizeof(reg) };

    reg.index = i;
    ioctl(device, VFIO_DEVICE_GET_REGION_INFO, &reg);

    /* Setup mappings... read/write offsets, mmaps
     * For PCI devices, config space is a region */
}

for (i = 0; i < device_info.num_irqs; i++) {
    struct vfio_irq_info irq = { .argsz = sizeof(irq) };

    irq.index = i;
    ioctl(device, VFIO_DEVICE_GET_IRQ_INFO, &irq);

    /* Setup IRQs... eventfds, VFIO_DEVICE_SET_IRQS */
}

/* Gratuitous device reset and go... */
ioctl(device, VFIO_DEVICE_RESET);
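The comment in the region loop notes that, for PCI devices, config space is itself a region. As an illustration of what "read/write offsets" means in practice, the sketch below reads the vendor and device IDs through the config region with `pread`. `read_pci_ids` is a hypothetical helper name, and the code assumes `device` is a file descriptor obtained with `VFIO_GROUP_GET_DEVICE_FD` for a vfio-pci device.

```c
#include <stdint.h>
#include <sys/ioctl.h>
#include <sys/types.h>
#include <unistd.h>
#include <linux/vfio.h>

/* Hypothetical helper: read the 16-bit vendor and device IDs from PCI
 * config space through the VFIO config region.
 * Returns 0 on success, -1 on error. */
static int read_pci_ids(int device, uint16_t *vendor, uint16_t *dev_id)
{
    struct vfio_region_info reg = {
        .argsz = sizeof(reg),
        .index = VFIO_PCI_CONFIG_REGION_INDEX,
    };
    uint8_t buf[4];

    if (ioctl(device, VFIO_DEVICE_GET_REGION_INFO, &reg) < 0)
        return -1;
    if (!(reg.flags & VFIO_REGION_INFO_FLAG_READ))
        return -1;

    /* The region is accessed with pread/pwrite on the device fd,
     * starting at the offset reported for that region. */
    if (pread(device, buf, sizeof(buf), (off_t)reg.offset) != (ssize_t)sizeof(buf))
        return -1;

    /* PCI config space is little-endian: vendor ID at 0x00, device ID at 0x02. */
    *vendor = (uint16_t)(buf[0] | (buf[1] << 8));
    *dev_id = (uint16_t)(buf[2] | (buf[3] << 8));
    return 0;
}
```

For the device used in this article, the values read back would be expected to match the `8086:5916` pair reported by lspci.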

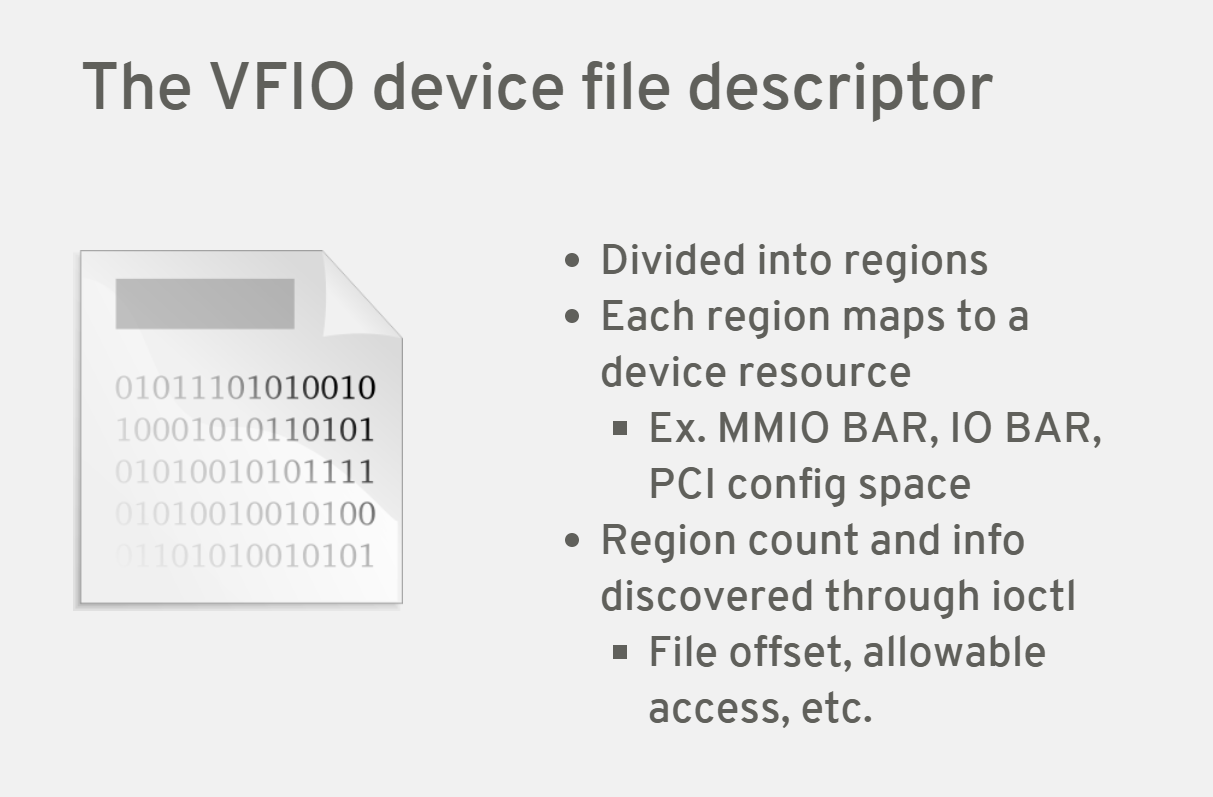

4. regions

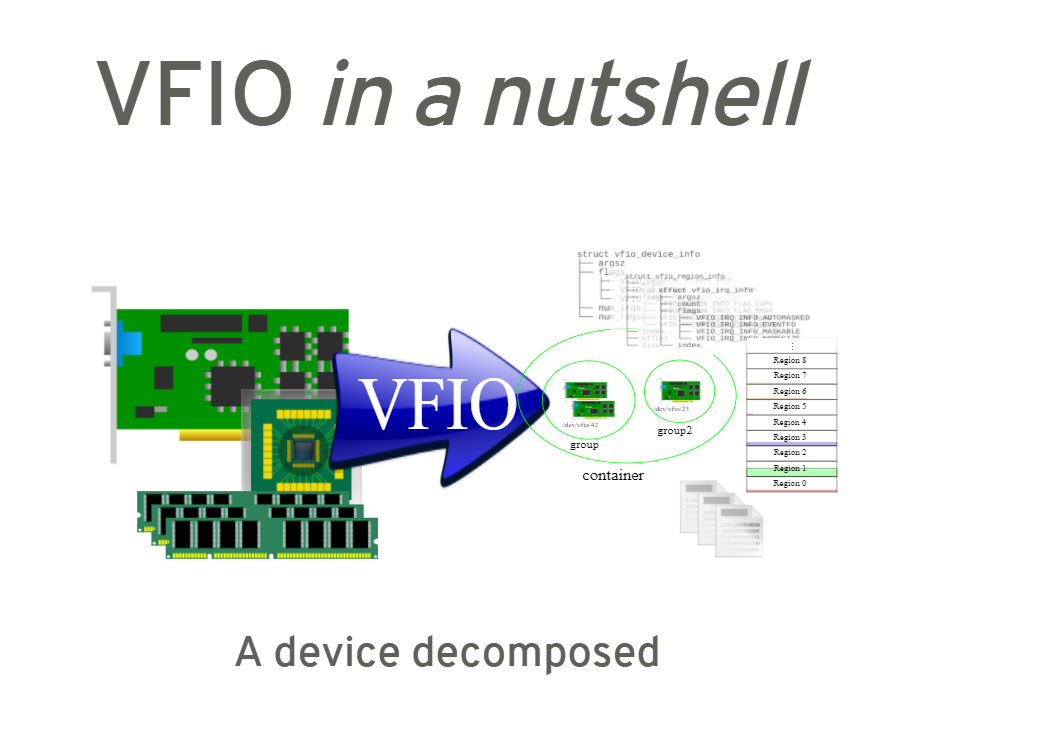

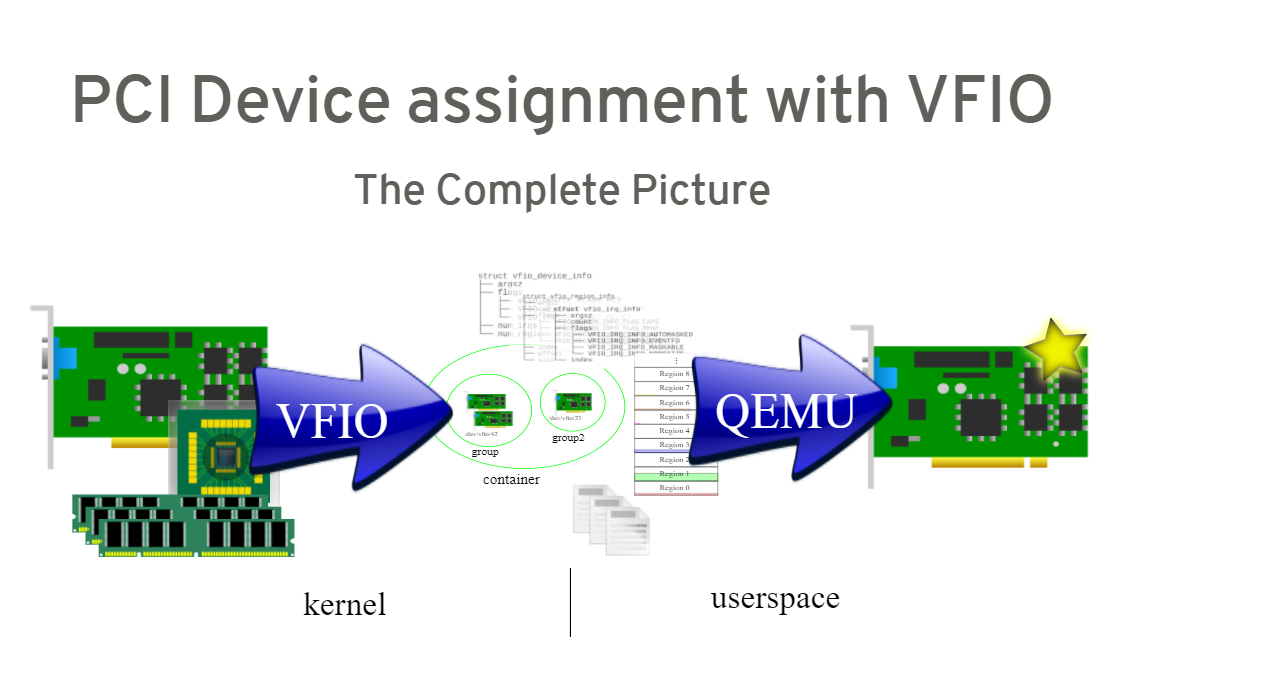

5. full picture

6. VFIO framework overview

The overall design of the VFIO framework is simple and clear, and can be described by the following diagram:

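+-------------------------------------------+
|                                           |
|             VFIO Interface                |
|                                           |
+---------------------+---------------------+
|                     |                     |
|     vfio_iommu      |      vfio_pci       |
|                     |                     |
+---------------------+---------------------+
|                     |                     |
|    iommu driver     |    pci_bus driver   |
|                     |                     |
+---------------------+---------------------+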

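The top layer is the VFIO interface layer, which exposes a unified access interface to user space. User space configures and invokes VFIO's capabilities through the agreed set of ioctls.

The middle layer consists of vfio_iommu and vfio_pci. vfio_iommu is VFIO's unified wrapper around the IOMMU layer; it mainly implements DMA remapping, that is, it manages the IOMMU page tables. vfio_pci is VFIO's unified wrapper around the PCI device driver; together with the user-space process it provides access to device resources, including PCI configuration space emulation, PCI BAR redirection, and interrupt remapping.

The bottom layer is the hardware driver layer. The iommu driver is the platform-specific implementation, for example the Intel IOMMU driver, the AMD IOMMU driver, or the PPC IOMMU driver. vfio_pci calls into the host's pci_bus driver to register and unregister devices.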
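From user space, the DMA remapping role of vfio_iommu is visible as a pair of ioctls on the container: one installs a translation in the IOMMU page tables, the other removes it. The following is a minimal sketch for the type1 IOMMU model used earlier; `dma_map_region` and `dma_unmap_region` are hypothetical helper names, and `container` is assumed to be a container fd on which `VFIO_SET_IOMMU` has already succeeded.

```c
#include <stdint.h>
#include <sys/ioctl.h>
#include <linux/vfio.h>

/* Hypothetical helper: make [vaddr, vaddr + size) visible to the device at
 * I/O virtual address iova. Returns 0 on success, -1 on error. */
static int dma_map_region(int container, void *vaddr, uint64_t iova, uint64_t size)
{
    struct vfio_iommu_type1_dma_map map = {
        .argsz = sizeof(map),
        .flags = VFIO_DMA_MAP_FLAG_READ | VFIO_DMA_MAP_FLAG_WRITE,
        .vaddr = (uint64_t)(uintptr_t)vaddr,
        .iova  = iova,
        .size  = size,
    };

    return ioctl(container, VFIO_IOMMU_MAP_DMA, &map) < 0 ? -1 : 0;
}

/* Hypothetical helper: remove the translation for [iova, iova + size).
 * Returns 0 on success, -1 on error. */
static int dma_unmap_region(int container, uint64_t iova, uint64_t size)
{
    struct vfio_iommu_type1_dma_unmap unmap = {
        .argsz = sizeof(unmap),
        .iova  = iova,
        .size  = size,
    };

    return ioctl(container, VFIO_IOMMU_UNMAP_DMA, &unmap) < 0 ? -1 : 0;
}
```

A hypervisor would typically call the map helper once per guest memory region, using the guest-physical address as `iova`, so that device DMA lands in the right host pages.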
