Notes about multiqueue virtio-net


This post collects notes on multiqueue virtio-net.

1. Introduction

Multi-queue virtio-net provides an approach that scales the network performance as the number of vCPUs increases, by allowing them to transfer packets through more than one virtqueue pair at a time.

Today's high-end servers have more processors, and the guests running on them often have an increasing number of vCPUs. With single-queue virtio-net, the scalability of the guest's protocol stack is limited: network performance does not scale with the number of vCPUs, because virtio-net has only one TX queue and one RX queue, so the guest cannot transmit or receive packets in parallel.

Multi-queue support removes these bottlenecks by allowing parallel packet processing.

Multi-queue virtio-net provides the greatest performance benefit when:

- Traffic packets are relatively large.

- The guest is active on many connections at the same time, with traffic running between guests, guest to host, or guest to an external system.

- The number of queues is equal to the number of vCPUs. This is because multi-queue support optimizes RX interrupt affinity and TX queue selection in order to make a specific queue private to a specific vCPU.
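To show why matching the queue count to the vCPU count matters, here is a deliberately simplified model of TX queue selection. It assumes a hypothetical modulo mapping from vCPU index to queue index (`select_tx_queue` and `queue_owners` are illustrative names); the real guest driver and kernel logic (interrupt affinity hints, XPS) is more involved. The point it captures is that when the counts are equal every vCPU gets its own queue, and with fewer queues vCPUs must share.

```python
from typing import Dict, List


def select_tx_queue(vcpu: int, num_queues: int) -> int:
    """Hypothetical policy: map a vCPU to a TX queue by simple modulo."""
    return vcpu % num_queues


def queue_owners(num_vcpus: int, num_queues: int) -> Dict[int, List[int]]:
    """Return, for each queue index in use, the vCPUs that transmit on it."""
    owners: Dict[int, List[int]] = {}
    for vcpu in range(num_vcpus):
        owners.setdefault(select_tx_queue(vcpu, num_queues), []).append(vcpu)
    return owners


if __name__ == "__main__":
    print(queue_owners(4, 4))  # {0: [0], 1: [1], 2: [2], 3: [3]}: each queue private to one vCPU
    print(queue_owners(4, 2))  # {0: [0, 2], 1: [1, 3]}: two vCPUs contend for each queue
```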

2. Parallel send/receive processing

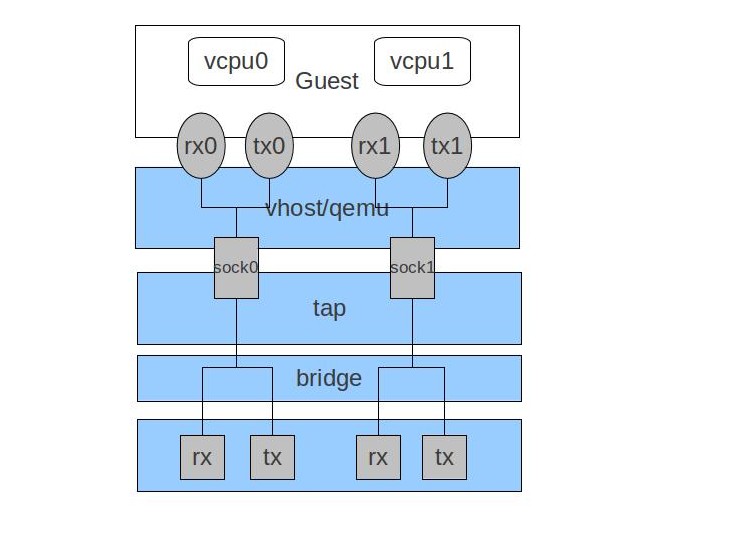

To make the whole stack work in parallel, parallelism must be exploited not only in the front end (the guest driver) but also in the back end (vhost and tap/macvtap). This is done by:

- Allowing multiple sockets to be attached to tap/macvtap

- Using multiple vhost threads as the back end of a multiqueue-capable virtio-net adapter

- Using a multiqueue-aware virtio-net driver to send and receive packets to/from each queue
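The structure described in the list above can be sketched as a toy model: one worker thread per queue pair, each draining only its own queue, so no queue is shared between workers. This is an assumption-laden illustration (`run_backend` is a hypothetical name, and the "packet handling" is just a counter), not how vhost is implemented; Python threads are also limited by the GIL, so the sketch shows the layout, not the performance.

```python
import queue
import threading
from typing import Dict, List


def run_backend(packets_per_queue: List[List[bytes]]) -> Dict[int, int]:
    """Toy model: one worker per queue pair; returns packets handled per queue."""
    queues = [queue.Queue() for _ in packets_per_queue]
    processed: Dict[int, int] = {}
    lock = threading.Lock()

    def worker(idx: int) -> None:
        count = 0
        while True:
            pkt = queues[idx].get()
            if pkt is None:  # sentinel: this queue has no more packets
                break
            count += 1  # stand-in for handing the packet to this queue's tap socket
        with lock:
            processed[idx] = count

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(len(queues))]
    for t in threads:
        t.start()
    # "Guest driver" side: each queue is filled independently of the others.
    for i, pkts in enumerate(packets_per_queue):
        for pkt in pkts:
            queues[i].put(pkt)
        queues[i].put(None)
    for t in threads:
        t.join()
    return processed


if __name__ == "__main__":
    print(run_backend([[b"a"] * 3, [b"b"] * 5]))  # {0: 3, 1: 5} (dict order may vary)
```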

3. Configuration

3.1 If using QEMU

- Create a tap device with multiple queues; see Documentation/networking/tuntap.txt (section 3.3, "Multiqueue tuntap interface").

- Enable multiqueue for the tap backend (assuming N queue pairs): -netdev tap,vhost=on,queues=N

- Enable multiqueue and specify the MSI-X vectors on the QEMU command line (2N+2 vectors: N for the TX queues, N for the RX queues, 1 for config, and 1 for the possible control virtqueue): -device virtio-net-pci,mq=on,vectors=2N+2…
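The 2N+2 vector arithmetic and the two fragments above can be generated mechanically, as in this small sketch. `msix_vectors` and `qemu_mq_args` are hypothetical helper names; they emit only the multiqueue options quoted in the text. A working command line also needs whatever links the `-netdev` to the `-device` plus the rest of the VM options, which the text elides and this sketch deliberately does not fill in.

```python
from typing import List


def msix_vectors(n_queue_pairs: int) -> int:
    """2N+2: N TX vectors, N RX vectors, 1 config vector, 1 for a possible control vq."""
    if n_queue_pairs < 1:
        raise ValueError("need at least one queue pair")
    return 2 * n_queue_pairs + 2


def qemu_mq_args(n_queue_pairs: int) -> List[str]:
    """Only the multiqueue-related fragments; other required options are omitted."""
    return [
        "-netdev", f"tap,vhost=on,queues={n_queue_pairs}",
        "-device", f"virtio-net-pci,mq=on,vectors={msix_vectors(n_queue_pairs)}",
    ]


if __name__ == "__main__":
    print(msix_vectors(4))  # 10
    print(" ".join(qemu_mq_args(4)))
```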

3.2 If using libvirt

To use multi-queue virtio-net, enable support in the guest by adding the following to the guest XML configuration (where N ranges from 1 to 256, as the kernel supports up to 256 queues for a multi-queue tap device):

<interface type='network'>
  <source network='default'/>
  <model type='virtio'/>
  <driver name='vhost' queues='N'/>
</interface>
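If the guest XML is produced by a script, the snippet above can be generated with the standard library, enforcing the 1 to 256 range quoted in the text. This is a sketch: `mq_interface_xml` is a hypothetical helper, and it only builds the `<interface>` element; inserting it into a full domain definition is left out.

```python
import xml.etree.ElementTree as ET


def mq_interface_xml(queues: int, network: str = "default") -> str:
    """Return an <interface> element with vhost multiqueue enabled."""
    if not 1 <= queues <= 256:  # limit stated above for multi-queue tap devices
        raise ValueError("queues must be between 1 and 256")
    iface = ET.Element("interface", type="network")
    ET.SubElement(iface, "source", network=network)
    ET.SubElement(iface, "model", type="virtio")
    ET.SubElement(iface, "driver", name="vhost", queues=str(queues))
    return ET.tostring(iface, encoding="unicode")


if __name__ == "__main__":
    print(mq_interface_xml(4))
```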

3.3 In the guest

When running a virtual machine with N virtio-net queues, enable multi-queue support in the guest with the following command (where M ranges from 1 to N):

$ ethtool -L eth0 combined M
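A script that runs this command inside the guest might validate M against N first, as sketched below. `ethtool_combined_cmd` and `apply_combined` are hypothetical helpers; only `ethtool -L <iface> combined M` itself comes from the text. Running it requires root and the ethtool binary in the guest.

```python
import subprocess
from typing import List


def ethtool_combined_cmd(iface: str, m: int, n: int) -> List[str]:
    """Build `ethtool -L <iface> combined M`, enforcing 1 <= M <= N."""
    if not 1 <= m <= n:
        raise ValueError(f"M must be between 1 and {n}, got {m}")
    return ["ethtool", "-L", iface, "combined", str(m)]


def apply_combined(iface: str, m: int, n: int) -> None:
    # Needs root privileges and ethtool installed in the guest.
    subprocess.run(ethtool_combined_cmd(iface, m, n), check=True)


if __name__ == "__main__":
    # With N = 4 queues on a 4-vCPU guest, enabling all 4 matches queues == vCPUs.
    print(" ".join(ethtool_combined_cmd("eth0", 4, 4)))  # ethtool -L eth0 combined 4
```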

4. Verification

Check the current channel settings:

$ ethtool -l eth0
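The `ethtool -l` output lists a "Pre-set maximums" section and a "Current hardware settings" section, each with a Combined count. A small parser for that layout is sketched below; the sample text is illustrative, not captured from a real guest, and the exact layout can differ between ethtool versions, so treat `combined_channels` (a hypothetical helper) as a starting point.

```python
from typing import Dict, Optional


def combined_channels(ethtool_l_output: str) -> Dict[str, Optional[int]]:
    """Extract the Combined count from the maximums and current sections."""
    section = None
    result: Dict[str, Optional[int]] = {}
    for line in ethtool_l_output.splitlines():
        text = line.strip()
        if text.startswith("Pre-set maximums"):
            section = "max"
        elif text.startswith("Current hardware settings"):
            section = "current"
        elif text.startswith("Combined:") and section is not None:
            value = text.split(":", 1)[1].strip()
            # Non-numeric values (e.g. "n/a") are reported as None.
            result[section] = int(value) if value.isdigit() else None
    return result


# Illustrative sample only.
SAMPLE = """\
Channel parameters for eth0:
Pre-set maximums:
RX:\t\t0
TX:\t\t0
Other:\t\t0
Combined:\t4
Current hardware settings:
RX:\t\t0
TX:\t\t0
Other:\t\t0
Combined:\t4
"""

if __name__ == "__main__":
    print(combined_channels(SAMPLE))  # {'max': 4, 'current': 4}
```

If "current" is lower than "max", not all queues have been enabled with `ethtool -L`.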

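To verify that multiqueue is actually in effect, observe the interrupts, i.e. cat /proc/interrupts:

$ cat /proc/interrupts

You can see that the interrupts are distributed across the 4 virtio0 queues.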
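Rather than eyeballing /proc/interrupts, the per-queue counts can be summed across CPUs with a short parser. This sketch assumes the usual layout (a CPU header row, then one row per IRQ with per-CPU counts and the device name last) and that virtio-net queue interrupts are named like `virtio0-input.0`; those names are typical but may vary by driver and kernel. The sample below is made up for illustration, not real output.

```python
from typing import Dict


def virtio_irq_totals(text: str, prefix: str = "virtio0") -> Dict[str, int]:
    """Sum each matching IRQ's counts over all CPU columns."""
    lines = text.strip().splitlines()
    ncpu = len(lines[0].split())  # header row: CPU0 CPU1 ...
    totals: Dict[str, int] = {}
    for line in lines[1:]:
        fields = line.split()
        if len(fields) < ncpu + 2:
            continue  # rows such as "ERR: 0" carry no per-CPU columns
        counts = fields[1:1 + ncpu]
        if not all(c.isdigit() for c in counts):
            continue
        name = fields[-1]
        if name.startswith(prefix):
            totals[name] = sum(int(c) for c in counts)
    return totals


# Made-up sample: four input queues, each serviced on a different CPU.
SAMPLE = """\
           CPU0       CPU1       CPU2       CPU3
 24:          0          0          0          0   PCI-MSI 49152-edge      virtio0-config
 25:        812          0          0          0   PCI-MSI 49153-edge      virtio0-input.0
 27:          0        640          0          0   PCI-MSI 49155-edge      virtio0-input.1
 29:          0          0        705          0   PCI-MSI 49157-edge      virtio0-input.2
 31:          0          0          0        598   PCI-MSI 49159-edge      virtio0-input.3
NMI:          0          0          0          0   Non-maskable interrupts
"""

if __name__ == "__main__":
    busy = sorted(n for n, c in virtio_irq_totals(SAMPLE).items() if c > 0)
    print(len(busy), busy)
```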
