Notes about PV sched yield

This post records some notes on PV sched yield in KVM. The reference kernel version is v6.0.

Overview

Idea:

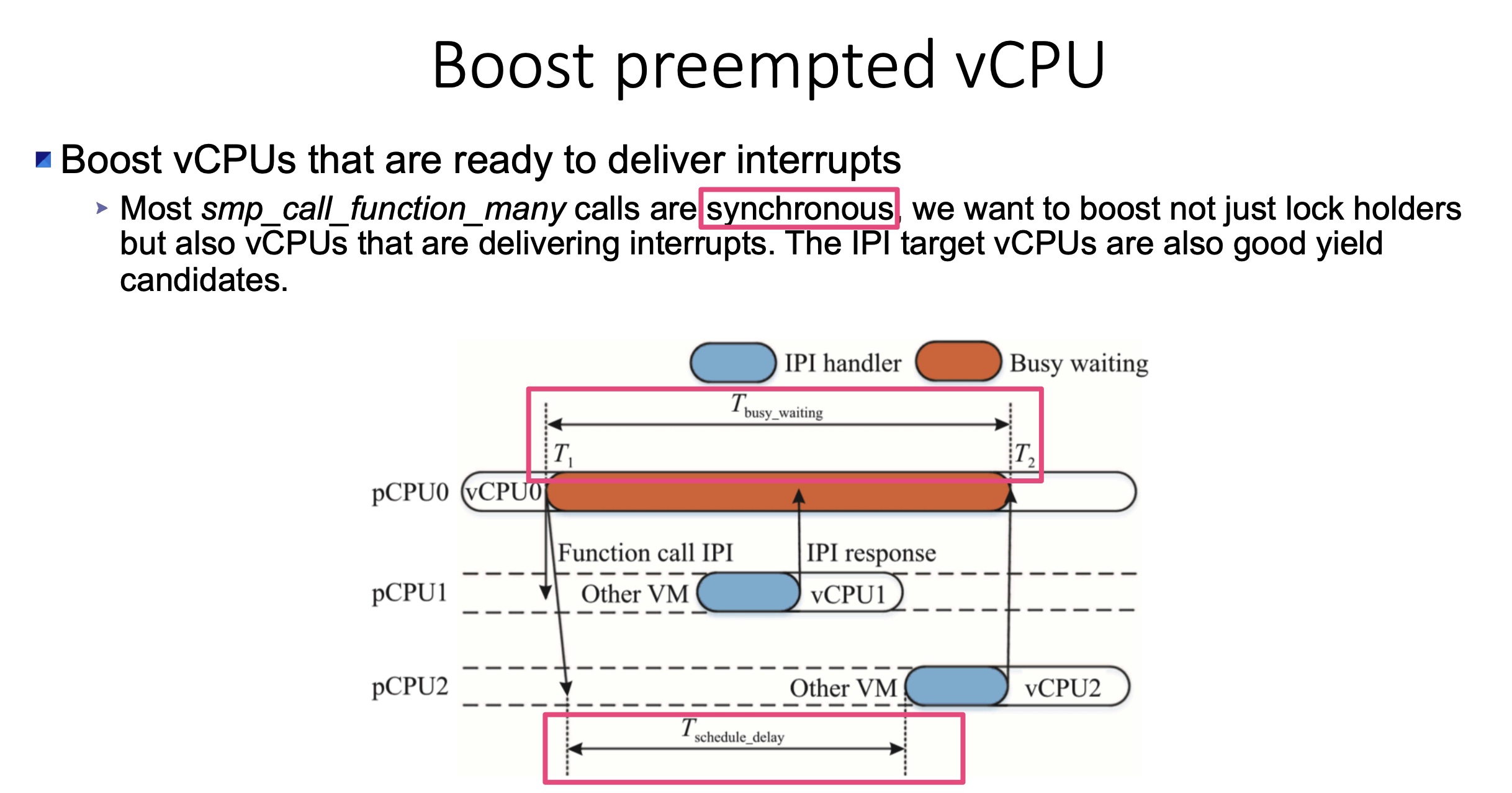

When sending a call-function IPI to multiple vCPUs, the source vCPU yields (via a hypercall) if any IPI target vCPU was preempted. It yields to the first preempted target vCPU that it finds.

If the IPI source vCPU does not yield to the preempted target vCPU, it keeps busy-waiting in non-root mode (see smp_call_function_many_cond) and stops only once the preempted target vCPU has been scheduled back into non-root mode. It is better for the source vCPU to yield directly to the preempted target vCPU.

    static void smp_call_function_many_cond(const struct cpumask *mask,
                                            smp_call_func_t func, void *info,
                                            unsigned int scf_flags,
                                            smp_cond_func_t cond_func)
    {
        ...
        if (run_remote && wait) {
            /* Wait for each CPU in turn to clear its csd flag; spin until it does. */
            for_each_cpu(cpu, cfd->cpumask) {
                call_single_data_t *csd;

                csd = &per_cpu_ptr(cfd->pcpu, cpu)->csd;
                csd_lock_wait(csd);
            }
        }
    }
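To make the cost of that wait loop concrete, here is a minimal user-space sketch. It is not kernel code: toy_csd, runs_at_tick and toy_wait_all are hypothetical names, and time is modeled as a simple tick counter. It only illustrates that the source spins until the slowest (for example, preempted) target has run.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical stand-in for one target's csd: the target clears 'locked'
 * once it has been scheduled and has run the requested function. */
struct toy_csd {
    bool locked;
    unsigned int runs_at_tick; /* tick at which the target vCPU gets CPU time */
};

/* Visit targets in order and spin on each csd, as the wait loop does.
 * Returns how many ticks the source vCPU burned while spinning. */
unsigned int toy_wait_all(struct toy_csd *csd, size_t n)
{
    unsigned int tick = 0;

    assert(csd != NULL || n == 0);
    for (size_t i = 0; i < n; i++) {
        while (csd[i].locked) {
            if (tick >= csd[i].runs_at_tick)
                csd[i].locked = false; /* target ran and released its csd */
            else
                tick++;                /* source spins for one more tick */
        }
    }
    return tick;
}
```

With three targets that get to run at ticks 0, 5 and 2, the source spins for 5 ticks in total: the wait is dominated by the target that is scheduled last, which is exactly the vCPU worth yielding to.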

The figure below illustrates why IPI target vCPUs are good yield candidates.

For details, see "KVM: Boost vCPUs that are delivering interrupts".

Initialization

kvm side

Export this feature to the guest:

    static inline int __do_cpuid_func(struct kvm_cpuid_array *array, u32 function)
    {
        ...
        case KVM_CPUID_FEATURES:
            entry->eax = (1 << KVM_FEATURE_CLOCKSOURCE) |
                         ...
                         (1 << KVM_FEATURE_PV_SCHED_YIELD) |
                         ...
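The feature word is an ordinary bitmask: the host sets one bit per feature and the guest tests a bit. A small sketch of both sides follows. The TOY_ names are mine, and the bit positions (0, 5, 13) are what I recall from the x86 KVM uapi header, so treat them as assumptions and verify them against arch/x86/include/uapi/asm/kvm_para.h.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Bit positions as I recall them from the x86 KVM uapi header; treat them
 * as assumptions and check arch/x86/include/uapi/asm/kvm_para.h. */
#define TOY_FEATURE_CLOCKSOURCE     0
#define TOY_FEATURE_STEAL_TIME      5
#define TOY_FEATURE_PV_SCHED_YIELD 13

/* Host side: build the EAX feature word by OR-ing one bit per feature. */
uint32_t toy_build_features(void)
{
    return (UINT32_C(1) << TOY_FEATURE_CLOCKSOURCE) |
           (UINT32_C(1) << TOY_FEATURE_STEAL_TIME) |
           (UINT32_C(1) << TOY_FEATURE_PV_SCHED_YIELD);
}

/* Guest side: a feature is present when its bit is set in the word. */
bool toy_has_feature(uint32_t eax, unsigned int feature)
{
    assert(feature < 32);
    return (eax >> feature) & 1u;
}
```

The guest-side check corresponds roughly to what kvm_para_has_feature does with the value it reads from the KVM CPUID leaf.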

guest side

When the guest starts up, it replaces smp_ops.send_call_func_ipi with kvm_smp_send_call_func_ipi if the PV sched yield feature is supported.

    static void __init kvm_guest_init(void)
    {
        ...
        if (pv_sched_yield_supported()) {
            smp_ops.send_call_func_ipi = kvm_smp_send_call_func_ipi;
            pr_info("setup PV sched yield\n");
        }
        ...
    }

    static bool pv_sched_yield_supported(void)
    {
        return (kvm_para_has_feature(KVM_FEATURE_PV_SCHED_YIELD) &&
                !kvm_para_has_hint(KVM_HINTS_REALTIME) &&
                kvm_para_has_feature(KVM_FEATURE_STEAL_TIME) &&
                !boot_cpu_has(X86_FEATURE_MWAIT) &&
                (num_possible_cpus() != 1));
    }
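The five conditions in pv_sched_yield_supported can be read as a pure predicate over the guest's capabilities. The sketch below restates it in user space; struct toy_guest_caps and its field names are hypothetical, chosen only to mirror the checks in the excerpt.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical snapshot of what the guest discovered at boot. */
struct toy_guest_caps {
    bool has_pv_sched_yield; /* host advertises the yield hypercall */
    bool has_steal_time;     /* needed to learn whether a vCPU is preempted */
    bool hint_realtime;      /* host hints that vCPUs are not preempted */
    bool has_mwait;          /* MWAIT is exposed to the guest */
    unsigned int possible_cpus;
};

/* Same conjunction as the excerpt: every condition must hold. */
bool toy_pv_sched_yield_supported(const struct toy_guest_caps *c)
{
    assert(c != NULL);
    return c->has_pv_sched_yield &&
           !c->hint_realtime &&
           c->has_steal_time &&
           !c->has_mwait &&
           c->possible_cpus != 1;
}
```

Written this way, it is easy to see that a single-vCPU guest or a guest with the realtime hint never installs the PV callback, even when the host advertises the feature.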

yield to the preempted target vCPU

guest side

When the guest sends a call-function IPI, the current vCPU calls native_send_call_func_ipi to deliver the IPI to the target vCPUs. If a target vCPU is preempted, the current vCPU then issues the KVM_HC_SCHED_YIELD hypercall.

We select only the first preempted target vCPU that we find, because the state of the target vCPUs can change underneath us and this avoids race conditions.

    static void kvm_smp_send_call_func_ipi(const struct cpumask *mask)
    {
        int cpu;

        native_send_call_func_ipi(mask);

        /* Make sure other vCPUs get a chance to run if they need to. */
        for_each_cpu(cpu, mask) {
            if (!idle_cpu(cpu) && vcpu_is_preempted(cpu)) {
                kvm_hypercall1(KVM_HC_SCHED_YIELD, per_cpu(x86_cpu_to_apicid, cpu));
                break;
            }
        }
    }
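The selection rule in that loop (first CPU in the mask that is not idle and is preempted) can be isolated as a small function. This is a user-space sketch under my own assumptions: the cpumask and the per-CPU state are modeled as plain bool arrays, and toy_first_yield_target is a hypothetical name.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Return the index of the first CPU that is in the mask, is not idle and
 * is currently preempted, or -1 if no target qualifies. */
int toy_first_yield_target(const bool *in_mask, const bool *idle,
                           const bool *preempted, size_t ncpus)
{
    assert(in_mask != NULL && idle != NULL && preempted != NULL);
    for (size_t cpu = 0; cpu < ncpus; cpu++) {
        if (!in_mask[cpu])
            continue;           /* not an IPI target */
        if (!idle[cpu] && preempted[cpu])
            return (int)cpu;    /* stop at the first match, like the 'break' */
    }
    return -1;
}
```

In the kernel version, the returned CPU would be translated to its APIC ID and passed as the single hypercall argument. Only one hypercall is issued per IPI, regardless of how many targets are preempted.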

kvm side

KVM needs to implement a hypercall handler to process the yield hypercall:

    int kvm_emulate_hypercall(struct kvm_vcpu *vcpu)
    {
        ...
        case KVM_HC_SCHED_YIELD:
            if (!guest_pv_has(vcpu, KVM_FEATURE_PV_SCHED_YIELD))
                break;

            kvm_sched_yield(vcpu, a0);
            ret = 0;
            break;
        ...
    }

Find the target vCPU and yield to it:

    static void kvm_sched_yield(struct kvm_vcpu *vcpu, unsigned long dest_id)
    {
        struct kvm_vcpu *target = NULL;
        struct kvm_apic_map *map;

        vcpu->stat.directed_yield_attempted++;

        if (single_task_running())
            goto no_yield;

        rcu_read_lock();
        map = rcu_dereference(vcpu->kvm->arch.apic_map);

        if (likely(map) && dest_id <= map->max_apic_id && map->phys_map[dest_id])
            target = map->phys_map[dest_id]->vcpu;

        rcu_read_unlock();

        if (!target || !READ_ONCE(target->ready))
            goto no_yield;

        /* Ignore requests to yield to self */
        if (vcpu == target)
            goto no_yield;

        if (kvm_vcpu_yield_to(target) <= 0)
            goto no_yield;

        vcpu->stat.directed_yield_successful++;

    no_yield:
        return;
    }

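Line 26 of the code above calls kvm_vcpu_yield_to(target), where target is the destination vCPU. The current execution context is the thread of the IPI source vCPU, so once kvm_vcpu_yield_to has run, the source vCPU has yielded to the target vCPU.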

    int kvm_vcpu_yield_to(struct kvm_vcpu *target)
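The target lookup in kvm_sched_yield is a chain of guards: bounds-check the APIC ID, require a populated slot, require a ready target, and refuse to yield to self. Here is a user-space sketch of just that chain. The types are simplified assumptions: the map holds vCPU pointers directly (upstream goes through a LAPIC structure), there is no RCU, and the toy_ names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define TOY_MAP_SLOTS 4

struct toy_vcpu {
    bool ready; /* mirrors the 'ready' flag tested in the excerpt */
};

/* Simplified APIC map: slot i holds the vCPU with APIC ID i, or NULL. */
struct toy_apic_map {
    unsigned long max_apic_id;
    struct toy_vcpu *phys_map[TOY_MAP_SLOTS];
};

/* Returns the vCPU to yield to, or NULL for every 'no_yield' case. */
struct toy_vcpu *toy_pick_target(const struct toy_apic_map *map,
                                 const struct toy_vcpu *self,
                                 unsigned long dest_id)
{
    struct toy_vcpu *target = NULL;

    assert(map == NULL || map->max_apic_id < TOY_MAP_SLOTS);

    /* Bounds check comes first so phys_map is never indexed out of range. */
    if (map && dest_id <= map->max_apic_id && map->phys_map[dest_id])
        target = map->phys_map[dest_id];

    if (!target || !target->ready)
        return NULL;
    if (target == self) /* ignore requests to yield to self */
        return NULL;
    return target;
}
```

Because dest_id comes straight from the guest, the bounds check is what keeps a bogus APIC ID from turning into an out-of-range array access on the host.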

Now look at the yield_to function:

    /**
     * Return:
     *  true (>0) if we indeed boosted the target task.
     *  false (0) if we failed to boost the target.
     *  -ESRCH if there's no task to yield to.
     */
    int __sched yield_to(struct task_struct *p, bool preempt)
    {
        ...
        /* yield_to_task voluntarily gives up the CPU in favor of the specified task_struct */
        yielded = curr->sched_class->yield_to_task(rq, p, preempt);
        ...
    }

References: