Notes about CUDA Unified Memory


This post collects some notes on CUDA Unified Memory.

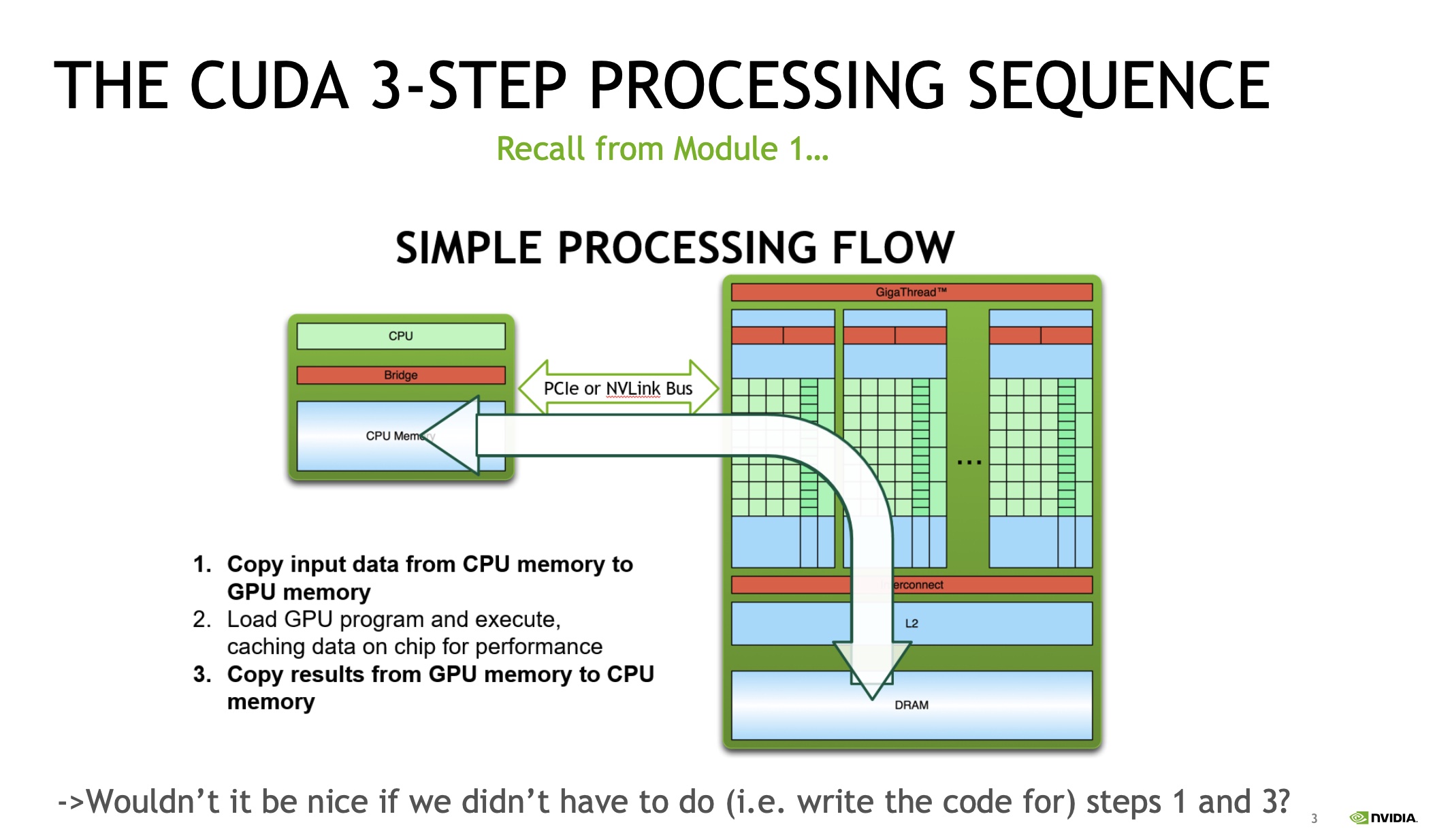

Motivation

Overview

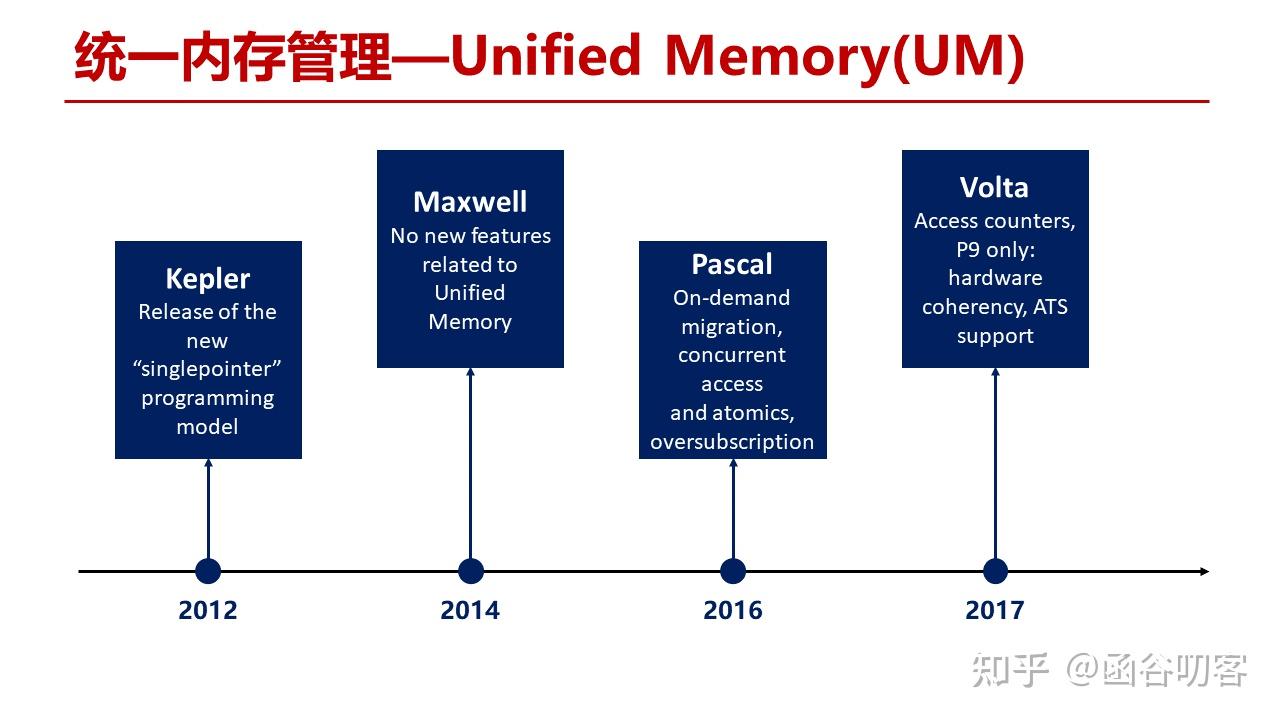

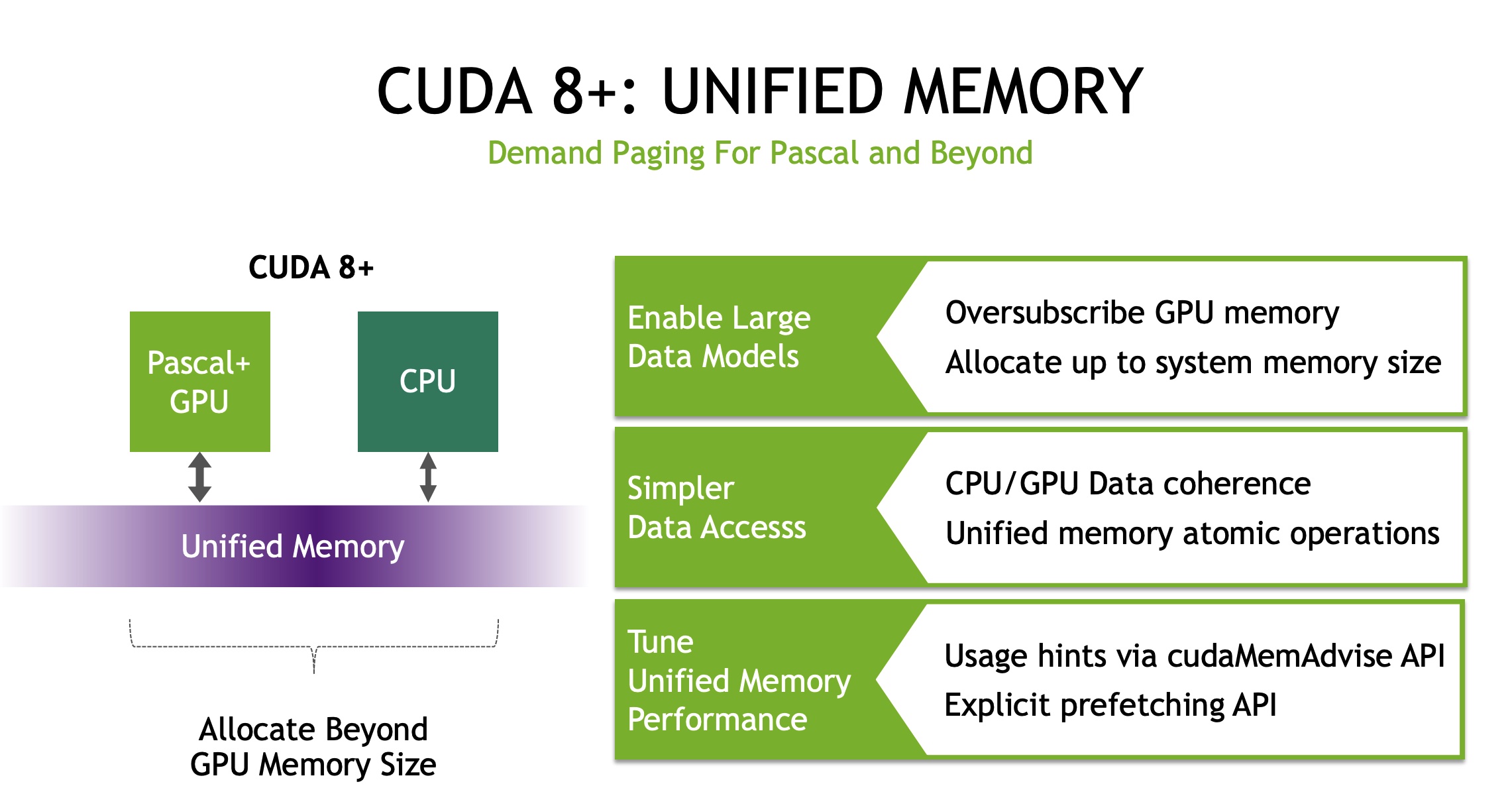

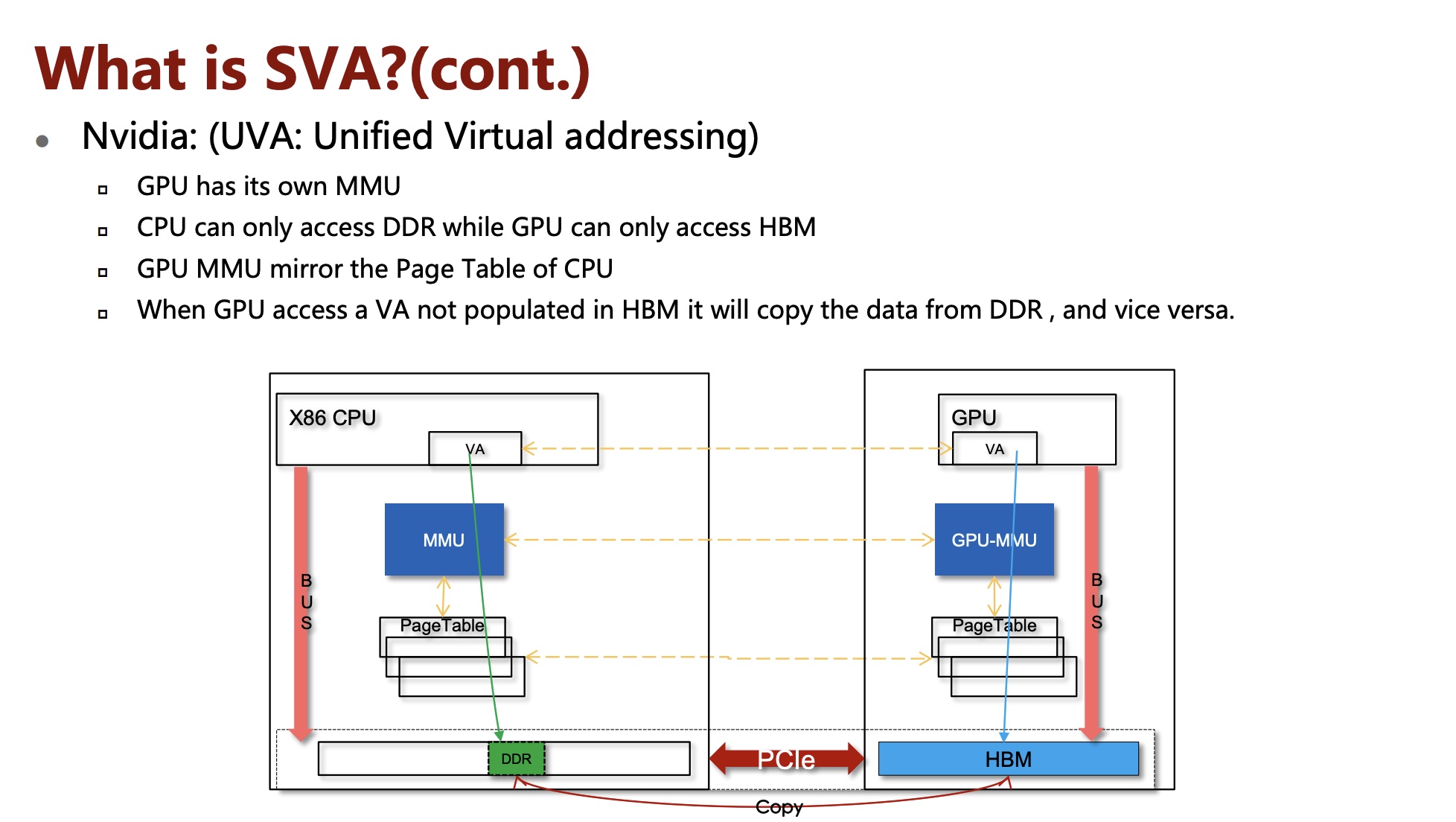

Traditionally, GPUs and CPUs have separate memory spaces, and an application running on one GPU cannot directly access data in the memory of other GPUs or of the CPU. To improve memory utilization, the NVIDIA Pascal GPU architecture, released in 2016, supports unified memory, i.e., each GPU can access the whole memory space of both GPUs and CPUs through uniform memory addresses. Specifically, unified memory presents a single memory address space to all GPUs and CPUs, with automatic page migration for data locality. The page migration engine also lets GPU threads trigger a page fault when the accessed data does not reside in GPU memory, so the system can efficiently migrate pages from anywhere in the system to GPU memory on demand.
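The on-demand migration described above can be sketched roughly as follows. This is a minimal illustration, not a tuned implementation: the kernel name `increment` and the helper `run_increment` are hypothetical names chosen for this example, and it assumes a Pascal-or-later GPU so that the first GPU touch of a CPU-resident page is handled by a page fault (on older GPUs the runtime instead migrates managed data before the kernel launch).

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Each thread increments one element. On Pascal and later GPUs, touching a page
// that currently resides in CPU memory raises a GPU page fault, and the page is
// migrated to GPU memory on demand.
__global__ void increment(int *data, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) data[i] += 1;
}

// Hypothetical helper: fills n ints with 1 on the CPU, increments them on the GPU,
// and returns their sum (or -1 if a CUDA call fails).
long long run_increment(int n) {
    int *data = nullptr;
    // One allocation, one pointer, valid on both CPU and GPU.
    if (cudaMallocManaged(&data, n * sizeof(int)) != cudaSuccess) return -1;

    for (int i = 0; i < n; ++i) data[i] = 1;        // first touch on the CPU

    increment<<<(n + 255) / 256, 256>>>(data, n);   // GPU accesses the same pointer
    if (cudaGetLastError() != cudaSuccess ||
        cudaDeviceSynchronize() != cudaSuccess) {   // wait before the CPU reads again
        cudaFree(data);
        return -1;
    }

    long long sum = 0;
    for (int i = 0; i < n; ++i) sum += data[i];     // CPU access brings pages back
    cudaFree(data);
    return sum;
}

int main() {
    const int n = 1 << 20;
    printf("sum = %lld (expected %lld)\n", run_increment(n), 2LL * n);
    return 0;
}
```

Note that the code contains no explicit copy in either direction; the only synchronization point is `cudaDeviceSynchronize()`, which ensures the kernel has finished before the CPU reads the data.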

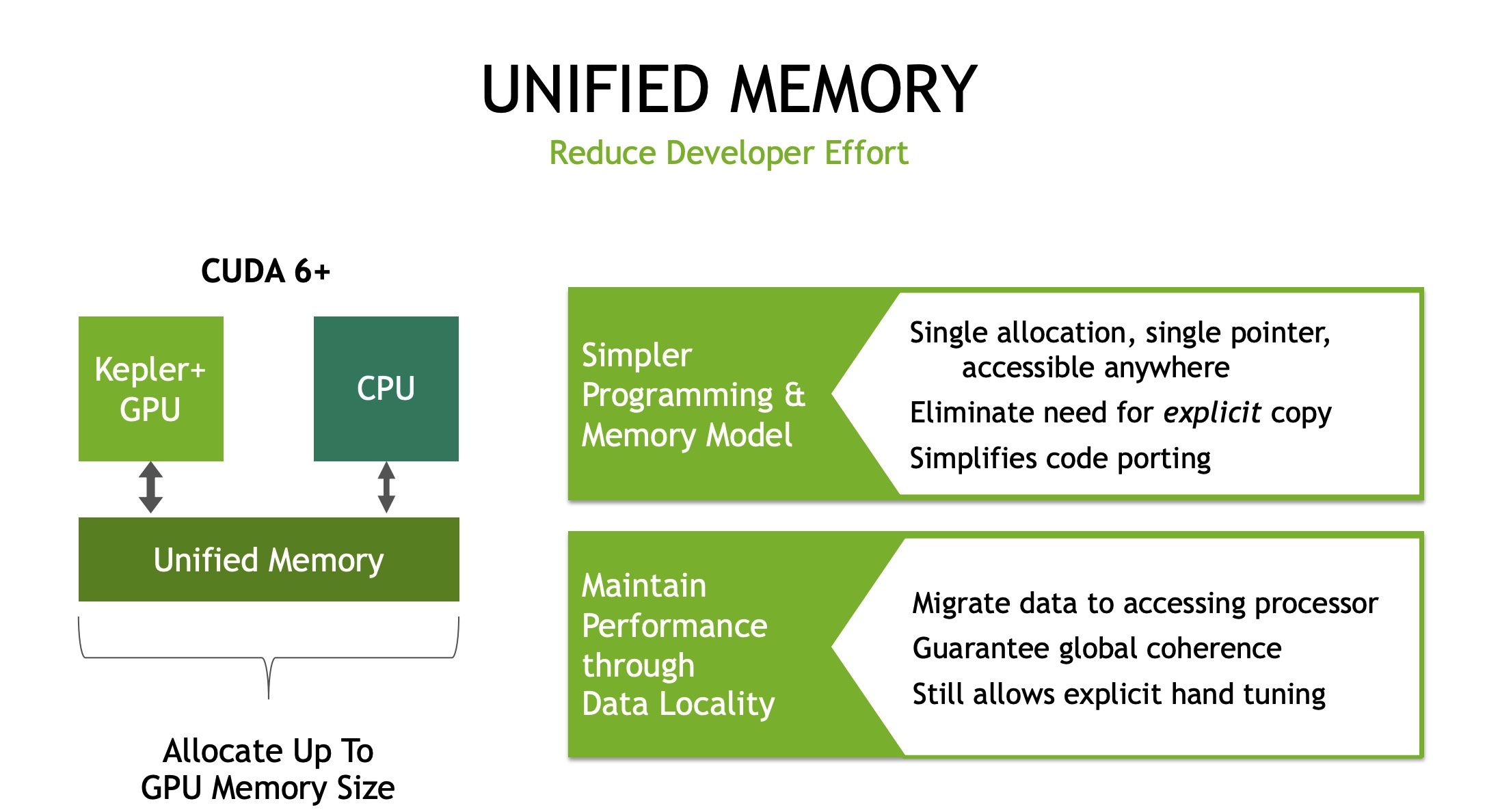

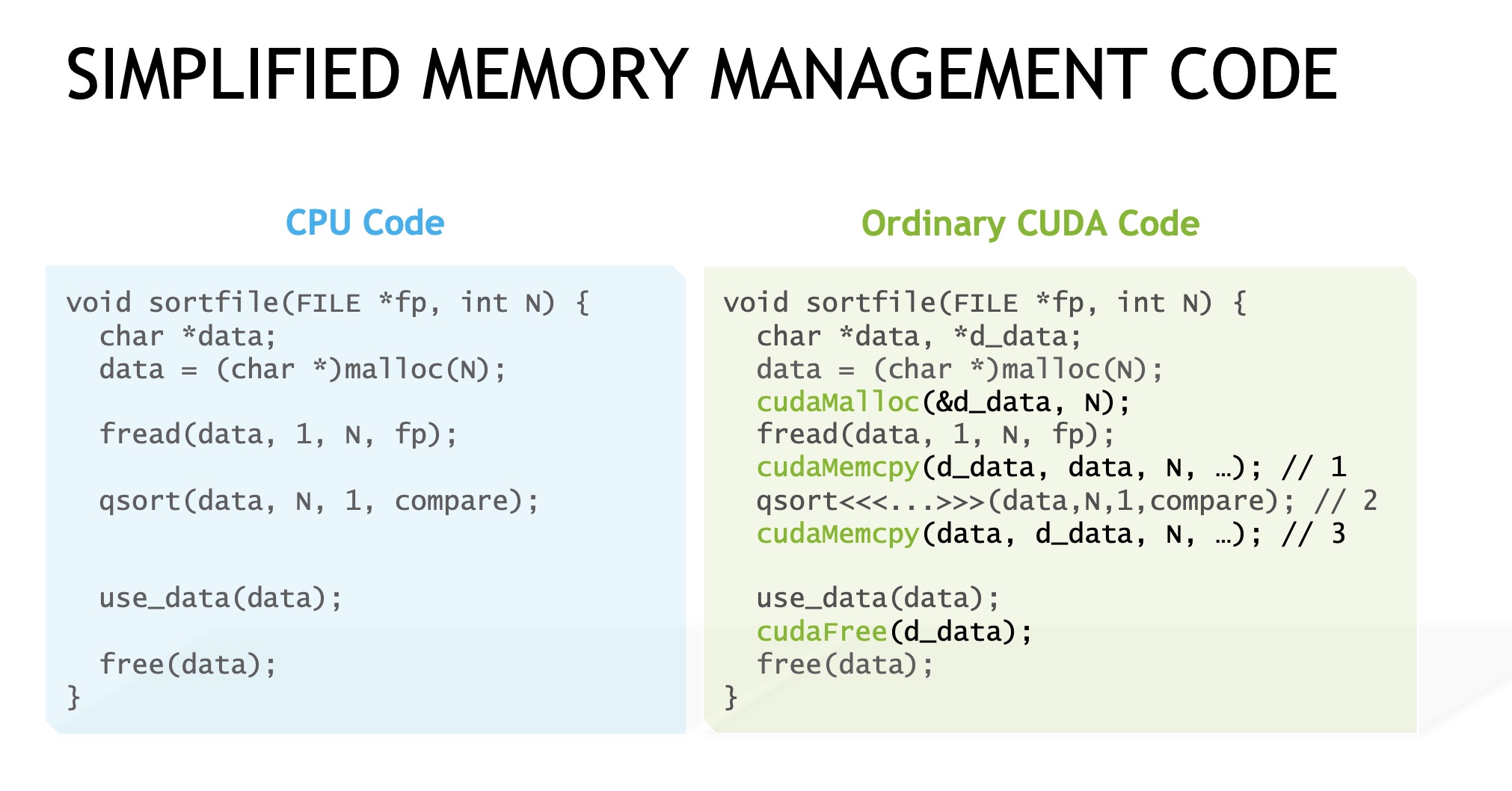

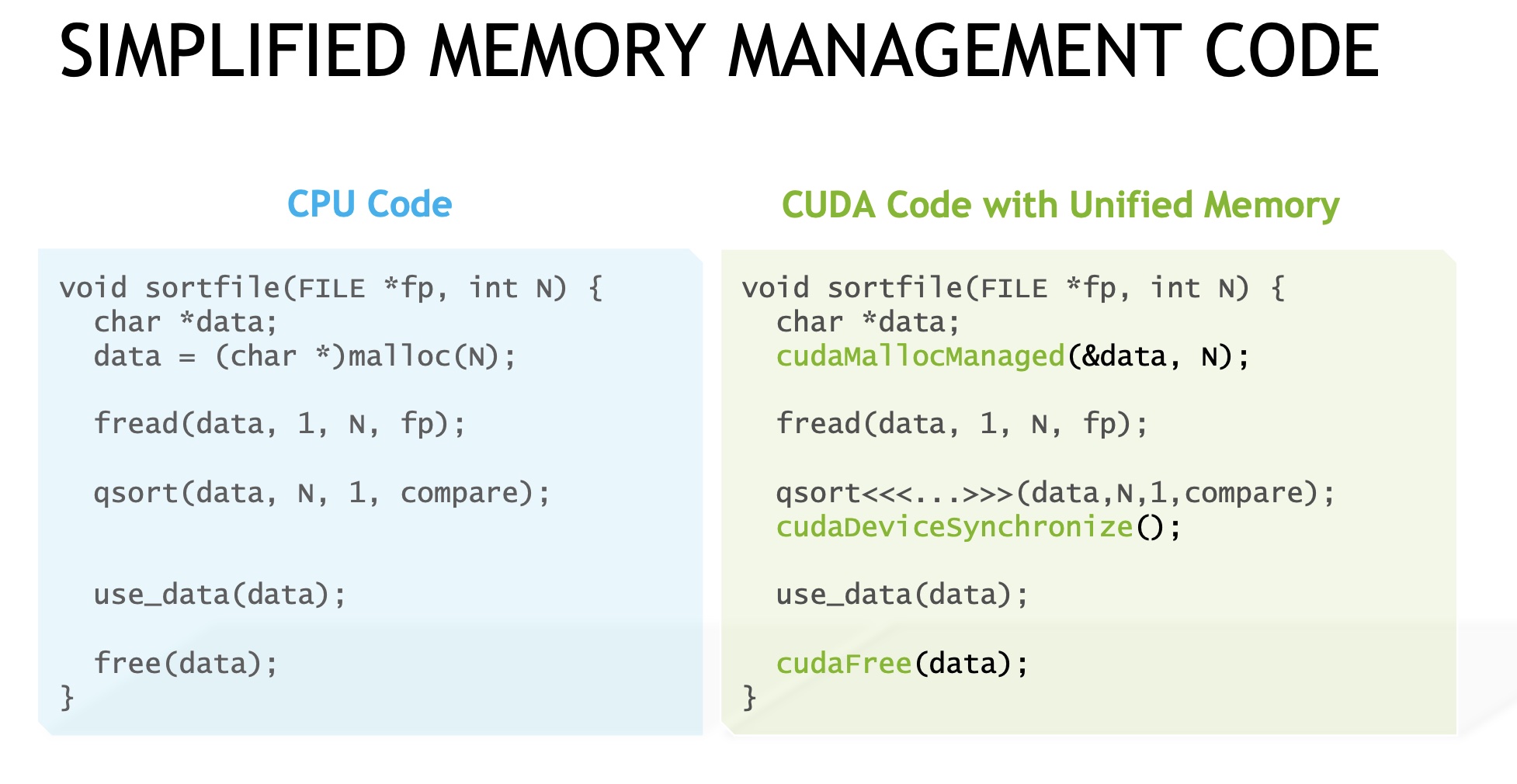

The benefits of unified memory are twofold. First, it enables a GPU to handle datasets larger than its own memory, because unified memory can migrate data from CPU memory to GPU memory on demand. Second, it simplifies the programming model: programmers can use a single pointer to access data pages no matter where they reside, instead of explicitly issuing data transfers.
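To make the second benefit concrete, the sketch below contrasts the two styles on the same toy computation. The function names `scale_explicit` and `scale_managed` and the kernel `scale` are hypothetical, and error checking is omitted for brevity; treat it as an illustration of the difference in programming model rather than a recommended pattern.

```cuda
#include <cstdio>
#include <cstdlib>
#include <cuda_runtime.h>

// Multiplies every element of x by a.
__global__ void scale(float *x, int n, float a) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) x[i] *= a;
}

// Explicit model: separate host and device buffers, plus two explicit copies.
float scale_explicit(int n, float a) {
    float *h = (float *)malloc(n * sizeof(float));
    float *d = nullptr;
    for (int i = 0; i < n; ++i) h[i] = 1.0f;

    cudaMalloc(&d, n * sizeof(float));
    cudaMemcpy(d, h, n * sizeof(float), cudaMemcpyHostToDevice);   // CPU -> GPU
    scale<<<(n + 255) / 256, 256>>>(d, n, a);
    cudaMemcpy(h, d, n * sizeof(float), cudaMemcpyDeviceToHost);   // GPU -> CPU (blocks until done)

    float last = h[n - 1];
    cudaFree(d);
    free(h);
    return last;
}

// Unified memory model: one managed buffer, no explicit copies.
float scale_managed(int n, float a) {
    float *x = nullptr;
    cudaMallocManaged(&x, n * sizeof(float));
    for (int i = 0; i < n; ++i) x[i] = 1.0f;    // written by the CPU

    scale<<<(n + 255) / 256, 256>>>(x, n, a);   // read and written by the GPU
    cudaDeviceSynchronize();                    // required before the CPU reads x

    float last = x[n - 1];
    cudaFree(x);
    return last;
}

int main() {
    const int n = 1 << 16;
    printf("explicit: %f, managed: %f\n",
           scale_explicit(n, 3.0f), scale_managed(n, 3.0f));
    return 0;
}
```

Both functions compute the same result; the managed version simply drops the second buffer and the two `cudaMemcpy` calls, at the cost of leaving data movement to the runtime.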

CUDA 6+: UNIFIED MEMORY

Simplifies the programming model.

CUDA 8+: UNIFIED MEMORY

SVA

References: