Understanding the Linux Kernel Reading Notes: Memory Management

The sections “Page Frame Management” and “Memory Area Management” illustrate two different techniques for handling physically contiguous memory areas, while the section “Noncontiguous Memory Area Management” illustrates a third technique that handles noncontiguous memory areas. In these sections we’ll cover topics such as memory zones, kernel mappings, the buddy system, the slab cache, and memory pools.

1 Page Frame Management

1.1 Page Descriptors

State information of a page frame is kept in a page descriptor of type page. All page descriptors are stored in the mem_map array.
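Because the descriptors sit in one array, converting between a page frame number and its descriptor is plain array indexing. The following is a minimal sketch of that idea, not the kernel's code: the struct fields, NUM_FRAMES, and the `_sketch` function names are assumptions made for illustration.

```c
#include <stddef.h>

/* Hypothetical, heavily simplified page descriptor; the real
 * struct page carries many more fields. */
struct page {
    unsigned long flags;   /* state bits of the page frame */
    int count;             /* usage reference counter */
};

#define NUM_FRAMES 1024    /* assumed number of page frames */

/* One descriptor per page frame, indexed by page frame number. */
static struct page mem_map[NUM_FRAMES];

/* Page frame number -> descriptor: index into the array. */
static struct page *pfn_to_page_sketch(unsigned long pfn)
{
    return pfn < NUM_FRAMES ? &mem_map[pfn] : NULL;
}

/* Descriptor -> page frame number: offset from the array base. */
static unsigned long page_to_pfn_sketch(const struct page *page)
{
    return (unsigned long)(page - mem_map);
}
```

In this model, page_to_pfn_sketch(pfn_to_page_sketch(n)) gives back n for any valid frame number n.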

1.2 Non-Uniform Memory Access (NUMA)

The physical memory of the system is partitioned into several nodes. The physical memory inside each node can be split into several zones, and each node has a descriptor of type pg_data_t.

1.3 Memory Zones

Each memory zone has its own descriptor of type zone.

1.4 The Pool of Reserved Page Frames

Some kernel control paths cannot be blocked while requesting memory—this happens, for instance, when handling an interrupt or when executing code inside a critical region. In these cases, a kernel control path should issue atomic memory allocation requests. An atomic request never blocks: if there are not enough free pages, the allocation simply fails. The kernel reserves a pool of page frames for atomic memory allocation requests to be used only on low-on-memory conditions.
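The difference between atomic and ordinary requests can be pictured as two different floors on the number of free page frames. The sketch below is a simplified model under that assumption; struct zone_sketch, its fields, and alloc_frames_sketch are hypothetical names, and the real allocator's checks are more involved.

```c
#include <stdbool.h>

/* Hypothetical model of the free-page accounting. */
struct zone_sketch {
    unsigned long free_pages;  /* page frames currently free */
    unsigned long reserved;    /* frames held back for atomic requests */
};

/* Try to take nr page frames; returns true on success.
 * An ordinary request may not push free_pages below the reserve.
 * An atomic request may use the reserve, and fails (instead of
 * blocking) if even that is not enough. In this sketch an ordinary
 * request also just fails; a real one could sleep and retry. */
static bool alloc_frames_sketch(struct zone_sketch *z,
                                unsigned long nr, bool atomic)
{
    unsigned long floor = atomic ? 0 : z->reserved;

    if (z->free_pages < nr || z->free_pages - nr < floor)
        return false;
    z->free_pages -= nr;
    return true;
}
```

With 10 free frames and a reserve of 4, an ordinary request for 7 frames is refused, while an atomic request for the same 7 frames succeeds by dipping into the reserve.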

1.5 The Zoned Page Frame Allocator

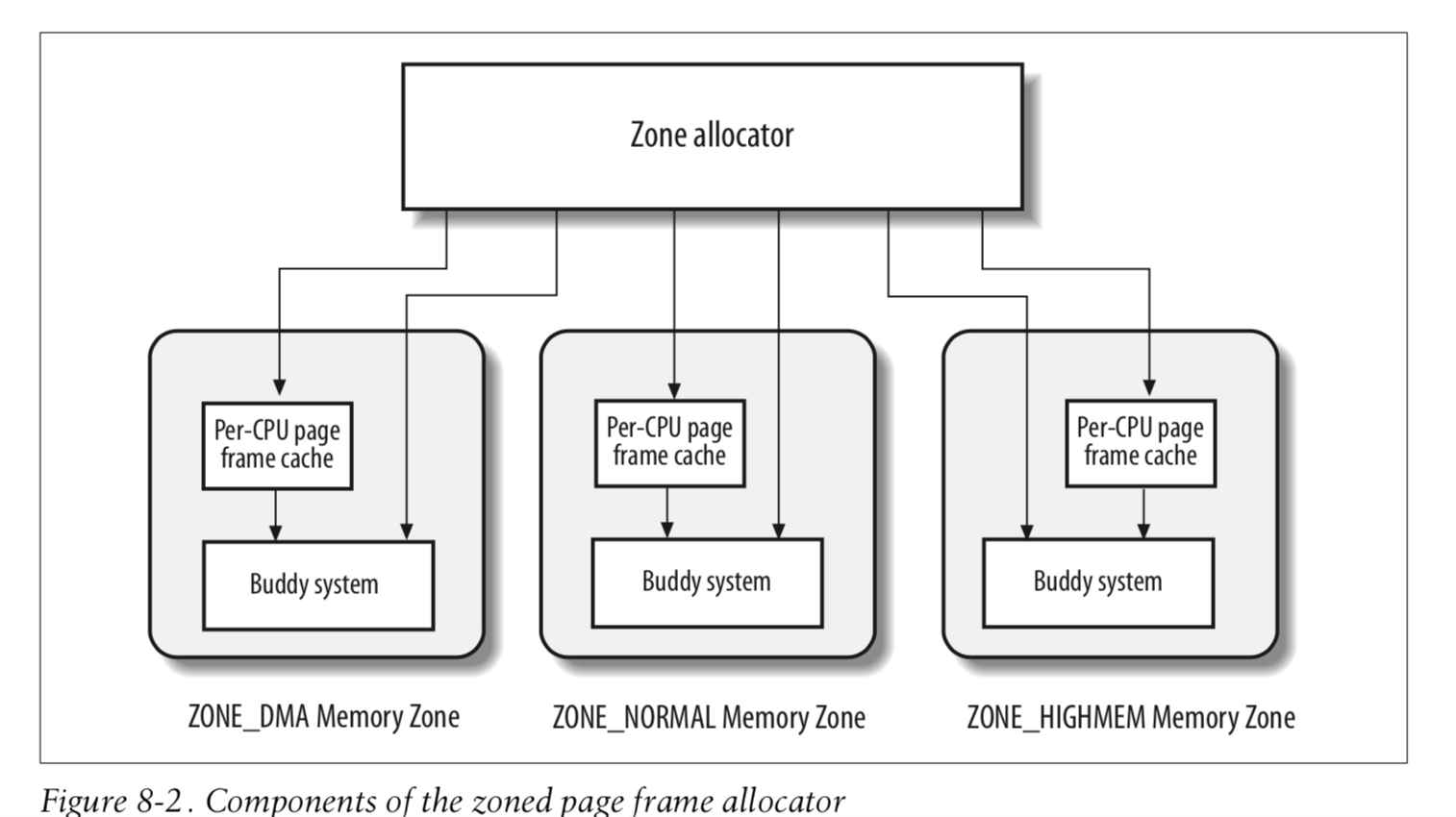

The kernel subsystem that handles the memory allocation requests for groups of contiguous page frames is called the zoned page frame allocator. Its main components are shown in Figure 8-2.

The component named “zone allocator” receives the requests for allocation and deallocation of dynamic memory. In the case of allocation requests, the component searches a memory zone that includes a group of contiguous page frames that can satisfy the request. Inside each zone, page frames are handled by a component named “buddy system”. To get better system performance, a small number of page frames are kept in cache to quickly satisfy the allocation requests for single page frames.

1.5.1 The Zone Allocator

The zone allocator is the frontend of the kernel page frame allocator. This component must locate a memory zone that includes a number of free page frames large enough to satisfy the memory request.

1.5.2 The Buddy System Algorithm

The kernel must establish a robust and efficient strategy for allocating groups of contiguous page frames. In doing so, it must deal with a well-known memory management problem called external fragmentation. The technique adopted by Linux to solve the external fragmentation problem is based on the well-known buddy system algorithm.
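In a buddy system, free page frames are grouped into blocks whose sizes are powers of two. Two blocks of the same size are buddies when they are adjacent and the first one is aligned to twice the block size; two free buddies are merged into one block of the next order. The index arithmetic behind this can be sketched as follows. The function names are chosen for illustration, and indices are page frame offsets within the zone.

```c
/* Index of the buddy of the block that starts at page_idx and
 * spans 2^order page frames: flip the bit that selects which
 * half of the parent block this block is. */
static unsigned long buddy_index(unsigned long page_idx, unsigned int order)
{
    return page_idx ^ (1UL << order);
}

/* Start index of the block of order + 1 obtained by merging the
 * block at page_idx with its buddy: clear that same bit. */
static unsigned long merged_index(unsigned long page_idx, unsigned int order)
{
    return page_idx & ~(1UL << order);
}
```

For example, the block of order 2 (four frames) starting at index 12 has its buddy at index 8, and merging the two gives a block of order 3 starting at index 8.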

1.5.3 The Per-CPU Page Frame Cache

The kernel often requests and releases single page frames. To boost system performance, each memory zone defines a per-CPU page frame cache. Each per-CPU cache includes some pre-allocated page frames to be used for single memory requests issued by the local CPU.
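A rough way to picture the per-CPU cache is a small stack of page frame numbers that is refilled from, and drained to, the buddy system in batches. The sketch below models one CPU only; PCP_HIGH, PCP_BATCH, the counter next_pfn standing in for the buddy system, and all function names are assumptions made for illustration.

```c
#define PCP_HIGH  8   /* assumed: drain when the cache holds this many */
#define PCP_BATCH 4   /* assumed: frames moved per refill or drain */

struct pcp_sketch {
    int count;                        /* frames currently cached */
    unsigned long frames[PCP_HIGH];   /* cached page frame numbers */
};

static unsigned long next_pfn = 100;  /* stand-in for the buddy system */
static int buddy_trips;               /* how often we went to the buddy system */

/* Fetch a batch of frames from the (simulated) buddy system. */
static void buddy_refill(struct pcp_sketch *pcp)
{
    buddy_trips++;
    for (int i = 0; i < PCP_BATCH; i++)
        pcp->frames[pcp->count++] = next_pfn++;
}

/* Hand a batch back; this model simply forgets the drained frames. */
static void buddy_drain(struct pcp_sketch *pcp)
{
    buddy_trips++;
    pcp->count -= PCP_BATCH;
}

/* Allocate one frame, refilling the cache only when it is empty. */
static unsigned long pcp_alloc(struct pcp_sketch *pcp)
{
    if (pcp->count == 0)
        buddy_refill(pcp);
    return pcp->frames[--pcp->count];
}

/* Release one frame, draining a batch only when the cache is full. */
static void pcp_free(struct pcp_sketch *pcp, unsigned long pfn)
{
    if (pcp->count == PCP_HIGH)
        buddy_drain(pcp);
    pcp->frames[pcp->count++] = pfn;
}
```

Most single-frame requests are served from the array, so buddy_trips grows far more slowly than the number of allocations. A frame released by pcp_free is also the next one returned by pcp_alloc.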

1.6 Kernel Mappings of High-Memory Page Frames

2 Memory Area Management

A memory area is a sequence of memory cells having contiguous physical addresses and an arbitrary length.

2.1 The Slab Allocator

The buddy system algorithm adopts the page frame as the basic memory area. This is fine for dealing with relatively large memory requests, but how are we going to deal with requests for small memory areas, say a few tens or hundreds of bytes?

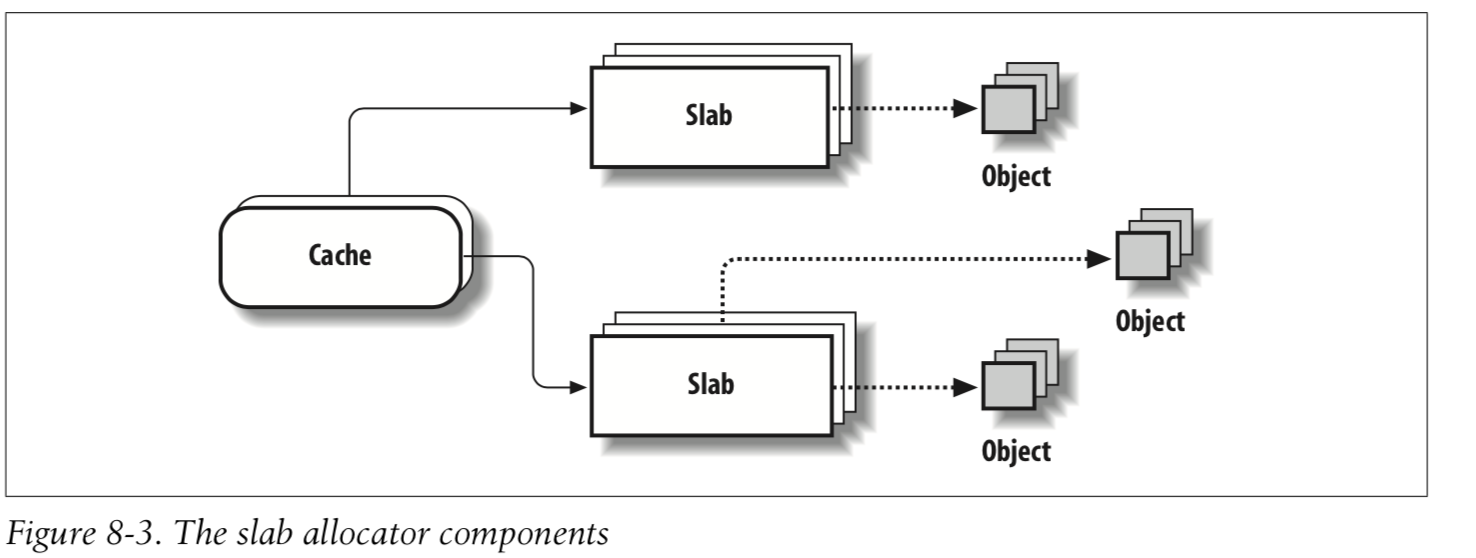

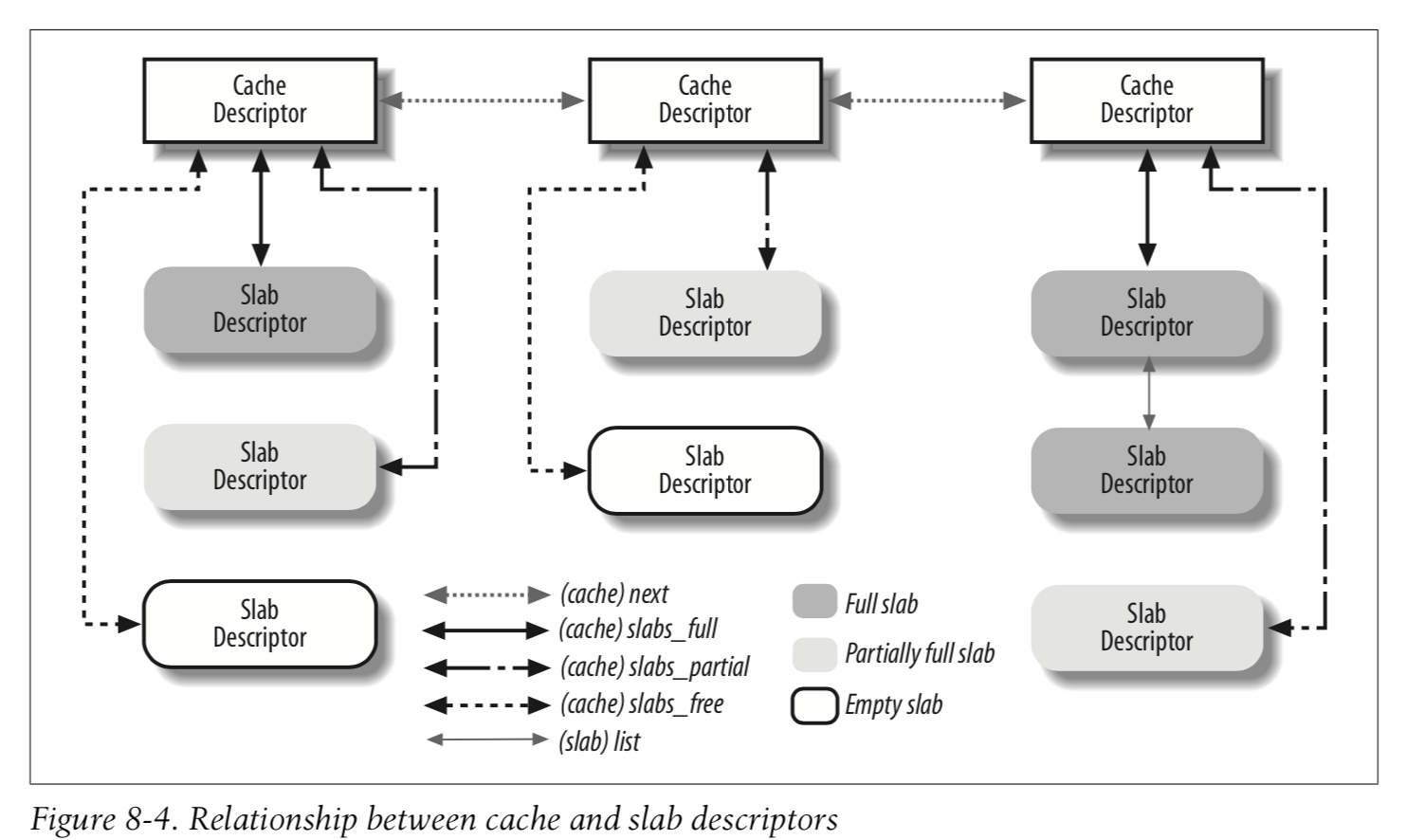

The slab allocator groups objects into caches. Each cache is a “store” of objects of the same type.

The area of main memory that contains a cache is divided into slabs; each slab consists of one or more contiguous page frames that contain both allocated and free objects.
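One way to track which objects in a slab are free is an index-linked free list kept beside the objects. The sketch below models a single slab of one page frame under assumed sizes; SLAB_BYTES, OBJ_SIZE, struct slab_sketch, and the function names are hypothetical, and real slabs also handle alignment, coloring, and constructors.

```c
#include <stddef.h>

#define SLAB_BYTES    4096   /* assumed: one 4 KB page frame per slab */
#define OBJ_SIZE      128    /* assumed object size of this cache */
#define OBJS_PER_SLAB (SLAB_BYTES / OBJ_SIZE)
#define END_OF_LIST   OBJS_PER_SLAB   /* marks the end of the free list */

struct slab_sketch {
    unsigned char mem[SLAB_BYTES];          /* the objects themselves */
    unsigned int next_free[OBJS_PER_SLAB];  /* per object: next free index */
    unsigned int first_free;                /* head of the free list */
    unsigned int inuse;                     /* objects currently allocated */
};

/* Chain all objects into the free list: 0 -> 1 -> ... -> END_OF_LIST. */
static void slab_init(struct slab_sketch *s)
{
    for (unsigned int i = 0; i < OBJS_PER_SLAB; i++)
        s->next_free[i] = i + 1;
    s->first_free = 0;
    s->inuse = 0;
}

/* Pop the first free object, or return NULL if the slab is full. */
static void *slab_alloc(struct slab_sketch *s)
{
    if (s->first_free == END_OF_LIST)
        return NULL;
    unsigned int i = s->first_free;
    s->first_free = s->next_free[i];
    s->inuse++;
    return s->mem + (size_t)i * OBJ_SIZE;
}

/* Push an object back onto the head of the free list. */
static void slab_free(struct slab_sketch *s, void *obj)
{
    unsigned int i =
        (unsigned int)(((unsigned char *)obj - s->mem) / OBJ_SIZE);
    s->next_free[i] = s->first_free;
    s->first_free = i;
    s->inuse--;
}
```

Allocation and release are both constant-time, and a slab whose inuse counter drops to zero is a candidate for returning its page frames to the page frame allocator.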

2.1.1 Local Caches of Free Slab Objects

To reduce spin lock contention among processors and to make better use of the hardware caches, each cache of the slab allocator includes a per-CPU data structure consisting of a small array of pointers to freed objects called the slab local cache.

2.2 General Purpose Objects

kmalloc()

2.3 Memory Pools

A memory pool allows a kernel component—such as the block device subsystem—to allocate some dynamic memory to be used only in low-on-memory emergencies.

Memory pools should not be confused with the reserved page frames described in the earlier section “The Pool of Reserved Page Frames.” In fact, those page frames can be used only to satisfy atomic memory allocation requests issued by interrupt handlers or inside critical regions. Instead, a memory pool is a reserve of dynamic memory that can be used only by a specific kernel component.

Often, a memory pool is stacked over the slab allocator—that is, it is used to keep a reserve of slab objects. Generally speaking, however, a memory pool can be used to allocate every kind of dynamic memory.
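The behavior described above can be sketched in user space as a small reserve of preallocated elements that is touched only when the underlying allocator fails. This is an illustrative model, not the kernel's mempool API: POOL_MIN, struct mempool_sketch, the function names, and the always_fail helper that simulates a low-on-memory condition are all assumptions.

```c
#include <stdlib.h>

#define POOL_MIN 4   /* assumed number of elements kept in reserve */

struct mempool_sketch {
    void *reserve[POOL_MIN];     /* preallocated emergency elements */
    int curr_nr;                 /* elements currently in the reserve */
    size_t elem_size;
    void *(*alloc_fn)(size_t);   /* underlying allocator, e.g. malloc */
};

/* Stand-in for an allocator under memory pressure. */
static void *always_fail(size_t n)
{
    (void)n;
    return NULL;
}

/* Fill the reserve up front, while memory is still available. */
static int pool_init(struct mempool_sketch *p, size_t elem_size,
                     void *(*alloc_fn)(size_t))
{
    p->curr_nr = 0;
    p->elem_size = elem_size;
    p->alloc_fn = alloc_fn;
    while (p->curr_nr < POOL_MIN) {
        void *e = malloc(elem_size);
        if (e == NULL)
            return -1;
        p->reserve[p->curr_nr++] = e;
    }
    return 0;
}

/* Try the underlying allocator first; use the reserve only if it fails. */
static void *pool_alloc(struct mempool_sketch *p)
{
    void *e = p->alloc_fn(p->elem_size);
    if (e != NULL)
        return e;
    return p->curr_nr > 0 ? p->reserve[--p->curr_nr] : NULL;
}

/* Refill the reserve before giving memory back to the system. */
static void pool_free(struct mempool_sketch *p, void *e)
{
    if (p->curr_nr < POOL_MIN)
        p->reserve[p->curr_nr++] = e;
    else
        free(e);
}
```

With malloc as alloc_fn the reserve stays untouched in normal operation; with always_fail, exactly POOL_MIN allocations still succeed, which is the guarantee the owning component relies on.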

3 Noncontiguous Memory Area Management

vmalloc()